This lesson adapts the implementation for regression trees from

- Lessons 5 & 7 from Jeremy Howard's Practical Machine Learning for Coders course

to binary classificaion tasks. You may also find the following references useful for conceptual understanding:

- Machine Learning Recipes #8 by Josh Gordon

- A visual introduction to machine learning by Stephanie Yee and Tony Chu

- Object-Oriented Programming (OOP) in Python 3 by Real Python

The data

We will continue to use the SUSY dataset from lesson 1, but this time using a random sample of 100,000 events:

susy_sample.feather

# reload modules before executing user code

%load_ext autoreload

# reload all modules every time before executing Python code

%autoreload 2

# render plots in notebook

%matplotlib inline

# uncomment this if running locally or on Google Colab

# !pip install --upgrade hepml

# data wrangling

import pandas as pd

import numpy as np

from pathlib import Path

from hepml.core import display_large, download_dataset

# data viz

import matplotlib.pyplot as plt

from sklearn.tree import plot_tree

# ml magic

from sklearn.ensemble import RandomForestClassifier

from sklearn.model_selection import train_test_split

from sklearn.metrics import roc_auc_score

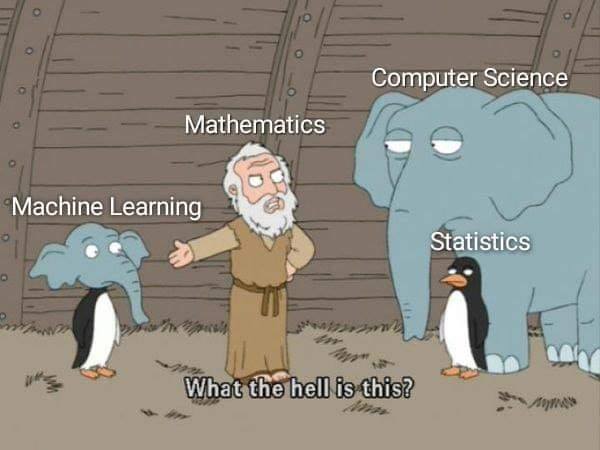

Figure reference: https://bit.ly/2S7XuJP

A good strategy when implementing any algorithm from scratch is to compare against an existing implementation. This is especially true for machine learning algorithms, where there's many ways to fail silently by introducing bugs in the data manipulation steps that do not throw an error, but result in worse performance in the target metric.

To that end, we will use the same sample of 100,000 events from the SUSY dataset that we analysed in lessons 1 and 2, and to simplify the analysis during development we'll start by picking just two features to build our Random Forest on. At each step, we will compare our implementation against scikit-learn's RandomForestClassifier as a way of testing the correctness of our code.

As usual, we can download our dataset by using our download_dataset helper function:

download_dataset("susy_sample.feather")

DATA = Path("../data")

!ls {DATA}

With pathlib it is a simple matter to define the filepath to the dataset, and since the file is in Feather format we can load it as a pandas.DataFrame as follows:

susy_sample = pd.read_feather(DATA / "susy_sample.feather")

susy_sample.head()

# sanity check on number of events

assert len(susy_sample) == 100_000

Next we create the feature matrix $X$ and target vector $y$, along with the training and validation sets:

X = susy_sample.drop("signal", axis=1)

y = susy_sample["signal"]

X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.2, random_state=42)

print(f"Dataset split: {len(X_train)} train rows + {len(X_valid)} valid rows")

# pick 2 features to experiment with

X_train_sub = X_train[["missing energy magnitude", "lepton 1 pT"]].copy()

X_valid_sub = X_valid[["missing energy magnitude", "lepton 1 pT"]].copy()

To get started, we need a data structure that will represent an ensemble of decision trees. As a first guess, the variables we'll need to initialise our ensemble might include:

X: the feature matrixy: the target vectorn_trees: how many trees to include in the forestsample_size: how big we want each sample to bemin_samples_leaf: some optional hyperparameter that controls the minimum number of samples required to be at a leaf node

With these considerations, let's go ahead and build our ensemble class

class TreeEnsemble:

# instance attributes

def __init__(

self, X: np.ndarray, y: np.ndarray, n_trees: int, sample_size: int, min_samples_leaf: int = 5,

):

# use constant random seed for testing

np.random.seed(42)

self.X = X

self.y = y

self.n_trees = n_trees

self.sample_size = sample_size

self.min_samples_leaf = min_samples_leaf

self.trees = [self.create_tree() for i in range(n_trees)]

# instance method

def create_tree(self):

# grab random subsample without replacement - no bootstrapping!

random_indices = np.random.permutation(len(self.y))[: self.sample_size]

return DecisionTree(

self.X.iloc[random_indices], self.y.iloc[random_indices], min_samples_leaf=self.min_samples_leaf,

)

# instance method

def predict(self, X):

return np.mean([t.predict(X) for t in self.trees], axis=0)

__init__() method to initialize an object’s initial attributes by giving them their default value. This method must have at least one argument as well as the self variable, which refers to the object itself (e.g., TreeEnsemble).Next let's try to instantiate this class:

rf = TreeEnsemble(X_train_sub, y_train, n_trees=10, sample_size=1000, min_samples_leaf=3)

Oops! Our TreeEnsemble assumes we have a class called DecisionTree, so let's create a minimal version that will allow us to instantiate it:

class DecisionTree:

def __init__(self, X: np.ndarray, y: np.ndarray, indices=None, min_samples_leaf: int = 5):

self.X = X

self.y = y

self.indices = indices

self.min_samples_leaf = min_samples_leaf

rf = TreeEnsemble(X_train_sub, y_train, n_trees=10, sample_size=1000, min_samples_leaf=3)

# test we can access attributes

rf.trees

Our DecisionTree class doesn't do anything yet, so let's add some logic to flesh it out. In addition to passing X, y, and min_samples_leaf to the constructor, we also need to keep track of which row indices are passed to the left- and right-hand sides of the tree. Since the root node contains all the rows, we can use None as a default and set indices to the length of y:

class DecisionTree:

def __init__(self, X: np.ndarray, y: np.ndarray, indices: np.ndarray = None, min_samples_leaf: int = 5):

# use all rows for root node

if indices is None:

indices = np.arange(len(y))

self.X = X

self.y = y

self.indices = indices

self.min_samples_leaf = min_samples_leaf

self.n_samples, self.n_features = len(indices), X.shape[1]

self.value = self.calculate_class_probabilities(self.y, self.indices)

self.gini = self.gini_impurity(self.y)

self.score = float("inf")

self.find_feature_split()

# this just does one decision - we'll make it recursive later

def find_feature_split(self):

for i in range(self.n_features):

self.find_better_split(i)

# we'll write this later!

def find_better_split(self, feature_index):

pass

# we'll write this later!

def calculate_class_probabilities(self, y, indices):

pass

# we'll write this later!

def gini_impurity(self, y):

pass

@property

def split_name(self):

return self.X.columns[self.feature_index]

@property

def split_feature(self):

return self.X.values[self.indices, self.feature_index]

@property

def is_leaf(self):

return self.score == float("inf")

# convert object to string - see https://dbader.org/blog/python-repr-vs-str

def __repr__(self):

s = f"n_samples: {self.n_samples}; value: {self.value}; gini: {self.gini}"

if not self.is_leaf:

s += f"; score: {self.score}; feature: {self.split_name}; split_point: {self.split}"

return s

Next let's check we can instantiate the ensemble and access the first decision tree:

rf = TreeEnsemble(X_train_sub, y_train, n_trees=10, sample_size=1000, min_samples_leaf=3)

rf.trees[0]

It works! Our __repr__ method now returns useful information about the attributes of the class instance, but we still need to implement methods for the class probabilities and Gini impurity. Let's consider each one in turn.

In order to average the predictions across multiple trees in our forest, we need know what percentage of a given leaf node contains signal events and what percentage contains background. A simple way to do that is via the np.unique method, e.g.

# returns tuple - (class_labels, class_counts)

np.unique(y_train, return_counts=True)

This is not quite what we need because it just provides the raw counts, so let's normalise them as follows:

np.unique(y_train, return_counts=True)[1] / len(y_train)

We can wrap this in a single function that also handles the cases where we only have one sample in a leaf node:

def calculate_class_probabilities(self, y: pd.Series, indices: np.ndarray):

probs = np.unique(y.iloc[indices], return_counts=True)[1] / len(y.iloc[indices])

# handle edge case when leaf only contains one sample

if len(probs) == 1:

if int(y.iloc[indices].values[0]) == 0:

value = np.array([1.0, 0.0])

else:

value = np.array([0.0, 1.0])

else:

value = probs

return value

We can now use this function to override our class method as follows:

DecisionTree.calculate_class_probabilities = calculate_class_probabilities

Finally, let's check that the function works as intended:

rf = TreeEnsemble(X_train_sub, y_train, n_trees=10, sample_size=1000, min_samples_leaf=3)

rf.trees[0]

The next piece of information we need to calculate is the Gini impurity at a given node in the tree. Recall that the formula for Gini impurity is

$$ G_i = 1 - \sum_{k=1}^n p_{i,k}^2 $$

where $p_{i,k}$ is the ratio of class $k$ examples among the training examples in the ith node. We can get the values of $p_{i,k}$ by using the np.unique method again, e.g.

y_pure = np.array([1, 1, 1, 1])

y_mixed = np.array([1, 0, 1, 0])

gini_pure = 1.0 - np.sum((np.unique(y_pure, return_counts=True)[1] / len(y_pure)) ** 2)

gini_mixed = 1.0 - np.sum((np.unique(y_mixed, return_counts=True)[1] / len(y_mixed)) ** 2)

print(f"Gini pure = {gini_pure}; Gini mixed = {gini_mixed}")

Let's wrap this logic in a function and override the one defined in our DecisionTree class:

def gini_impurity(self, y):

return 1.0 - np.sum((np.unique(y, return_counts=True)[1] / len(y)) ** 2)

DecisionTree.gini_impurity = gini_impurity

As before, we do a sanity check to see whether it works:

rf = TreeEnsemble(X_train_sub, y_train, n_trees=10, sample_size=1000, min_samples_leaf=3)

rf.trees[0]

🎉🎉🎉

Now that we are able to calculate the probabilities and Gini impurity of a Decision Tree's node, the next step is to implement the logic for the find_better_split function. To test that we are on the right track, let us first feed the same random sample from our ensemble to scikit-learns' RandomForestClassifier:

# create ensemble with 1 tree

rf = TreeEnsemble(X_train_sub, y_train, n_trees=1, sample_size=1000)

# extract DecisionTree instance

tree = rf.trees[0]

# extract sample associated with the Decision Tree

X_samp, y_samp = tree.X, tree.y

X_samp.columns

# create Decision Tree with same configuration as our algorithm

model = RandomForestClassifier(n_estimators=1, max_depth=1, bootstrap=False, random_state=42, max_features=None)

model.fit(X_samp, y_samp)

To aid with the comarison, let's also create a plotting function so we can visualise the nodes of our decision trees:

def draw_tree(fitted_model):

# get column names

feature_names = X_train_sub.columns

# define class names

class_names = ["Background", "Signal"]

fig, ax = plt.subplots(figsize=(30, 10))

# generate tree plot

plot_tree(

fitted_model.estimators_[0],

filled=True,

feature_names=feature_names,

class_names=class_names,

ax=ax,

fontsize=18,

proportion=True,

)

plt.show()

draw_tree(model)

To recreate this split, recall that scikit-learn uses the Classification And Regression Tree (CART) algorithm to train Decision Trees. Here the key idea is to search for the pair (feature, split_point) the produces the purest subsets, as measured by their Gini impurity. The function to minimise is given by

$$ J(k, t_k) = \frac{m_\mathrm{left}}{m} G_\mathrm{left} + \frac{m_\mathrm{right}}{m}G_\mathrm{right} $$

where $k$ is a single feature, $t_k$ is the split point (e.g. "missing energy magnitude $\leq$ 1.14), $m_\mathrm{left/right}$ is the number of examples in the left/right subset, and $G_\mathrm{left/right}$ is the Gini impurity. Thus for each feature $k$, the strategy will be to calculate $J(k, t_k)$ for every possible split point $t_k$ and update the score if it less than what we have seen already. Implemented as code, the result could look like:

def find_better_split(self, feature_index):

# get sample for a single feature

X, y = self.X.values[self.indices, feature_index], self.y.iloc[self.indices]

for t in range(self.n_samples):

# create boolean masks for samples above / below the split point t

lhs = X <= X[t]

rhs = X > X[t]

# continue if stopping criterion not reached

if rhs.sum() < self.min_samples_leaf or lhs.sum() < self.min_samples_leaf:

continue

# calculate Gini impurities for left and right nodes

lhs_gini = gini_impurity(self, y[lhs])

rhs_gini = gini_impurity(self, y[rhs])

# calculate score for current split point

curr_score = lhs_gini * len(y[lhs]) / len(y) + rhs_gini * len(y[rhs]) / len(y)

# update attributes if new score is smaller than old

if curr_score < self.score:

self.feature_index, self.score, self.split, self.gini = (

feature_index,

curr_score,

X[t],

gini_impurity(self, y),

)

Let's check this works by first calculating the best split point for lepton 1 pT:

%timeit find_better_split(tree, feature_index=1)

tree

Similarly for missing energy magnitude we find:

find_better_split(tree, feature_index=0)

tree

It works! We see that missing energy magnitude has a lower score (i.e. cost $J$) than lepton 1 pT, and produces class probabilities and a Gini impurity consistent with scikit-learn. The downside is that our implementation is slow - we can do better.

One problem with our implementation above is that we're repeatedly checking whether every value is greater or less than a given split point $t_k$. We can speed up the algorithm by first sorting the arrays and then observing that we can compute the Gini impurities efficiently by finding the index that separates the two groups (i.e. signal vs background).

To that end, let's reset our Decision Tree:

tree = TreeEnsemble(X_train_sub, y_train, 1, 1000).trees[0]

def find_better_split(self, feature_index):

X, y = self.X.values[self.indices, feature_index], self.y.values[self.indices]

sorted_indices = np.argsort(X)

y_sorted, X_sorted = y[sorted_indices], X[sorted_indices]

index = 0

for i in range(0, self.n_samples - self.min_samples_leaf):

index += 1

Xi = X_sorted[i]

# continue if stopping criterion not reached

if i < self.min_samples_leaf - 1 or Xi == X_sorted[i + 1]:

continue

# calculate Gini impurities for left and right nodes

lhs_gini = gini_impurity(self, y_sorted[:index])

rhs_gini = gini_impurity(self, y_sorted[index:])

# calculate score for current split point

curr_score = lhs_gini * len(y_sorted[:index]) / len(y_sorted) + rhs_gini * len(y_sorted[index:]) / len(y_sorted)

# update attributes if new score is smaller than old

if curr_score < self.score:

self.feature_index, self.score, self.split, self.gini = (

feature_index,

curr_score,

X_sorted[i],

gini_impurity(self, y),

)

%timeit find_better_split(tree, feature_index=1)

tree

Not bad - we've reduced the complexity of our original algorithm from $O(n^2)$ to $O(n)$ to gain roughly a factor of 60x speed up! Let's check we recover the same result for missing energy magnitude as before:

find_better_split(tree, feature_index=0)

tree

Finally, we can override the function in our DecisionTree class so we can access it from our TreeEnsemble:

DecisionTree.find_better_split = find_better_split

tree = TreeEnsemble(X_train, y_train, 1, 1000).trees[0]

tree

So far we have worked out how to find the best split point for single now - we are now ready to tackle building the whole tree! Let's increase the depth in our scikit-learn model to compare against:

model = RandomForestClassifier(n_estimators=1, max_depth=2, bootstrap=False, random_state=42, max_features=None)

model.fit(X_samp, y_samp)

draw_tree(model)

Recall that our DecisionTree class has a simple function to find the best split:

def find_feature_split(self):

for i in range(self.n_features):

self.find_better_split(i)

This is not quite sufficient it only checks for the best split at the root node, when in fact we need to check whether is a better split in the left and right child nodes. The following change to the code takes care of this:

def find_feature_split(self):

for i in range(self.n_features):

self.find_better_split(i)

if self.is_leaf:

return

X = self.split_feature

# grab indices for left and right nodes

lhs = np.nonzero(X <= self.split)[0]

rhs = np.nonzero(X > self.split)[0]

# recursively calculate decision trees for all nodes

self.lhs = DecisionTree(self.X, self.y, self.indices[lhs])

self.rhs = DecisionTree(self.X, self.y, self.indices[rhs])

Now we can override the function in our class and test we can reproduce the numbers in our scikit-learn tree:

DecisionTree.find_feature_split = find_feature_split

tree = TreeEnsemble(X_samp, y_samp, 1, 1000).trees[0]

tree

tree.lhs

tree.rhs

tree.lhs.lhs

tree.lhs.rhs

tree.rhs.lhs

tree.rhs.rhs

Hooray, it works 🥳🥳🥳!

Now that we have something that can train a Decision Tree, the next step is to implement the methods to generate predictions. Although we have TreeEnsemble.predict we do not have anything like this yet for DecisionTree. To make things more interesting, let's add a few more columns to our training set:

cols = ["missing energy magnitude", "lepton 1 pT", "axial MET", "M_TR_2", "M_R", "S_R"]

X_train_sub = X_train[cols].copy()

X_valid_sub = X_valid[cols].copy()

Let's create our tree ensemble again to make sure we can compare against scikit-learn:

%time tree = TreeEnsemble(X_train_sub, y_train, 1, 1000).trees[0]

X_samp, y_samp = tree.X, tree.y

model = RandomForestClassifier(

n_estimators=1, max_depth=None, bootstrap=False, max_features=None, random_state=42, min_samples_leaf=5,

)

model.fit(X_samp, y_samp)

To generate the predictions, we simply need to loop over each row in $X$ and build the array as follows:

def predict(self, X):

return np.array([self.predict_row(Xi) for Xi in X])

DecisionTree.predict = predict

The final step is to implement predict_row, which is mostly trivial: if we're at a leaf note we return the class label with the highest probability, otherwise we figure out whether to traverse to the left or right child node, and so on:

def predict_row(self, Xi):

if self.is_leaf:

return self.value

t = self.lhs if Xi[self.feature_index] <= self.split else self.rhs

return t.predict_row(Xi)

DecisionTree.predict_row = predict_row

With this we can now calculate our class probabilities:

%time probs = tree.predict(X_valid_sub.values)[:, 1]

and evaluate the ROC AUC score on the validation set:

roc_auc_score(y_valid, probs)

How does this compare with scikit-learn's implementation? Let's find out by calculating their predictions and evaluating on the validation set:

probs_sklearn = model.predict_proba(X_valid_sub)[:, 1]

roc_auc_score(y_valid, probs_sklearn)

With a difference of < 1%, our implementation does not perform too badly at all!

Let's wrap this up by collecting all of our class functions into two dedicated classes:

class TreeEnsemble:

# instance attributes

def __init__(

self, X: np.ndarray, y: np.ndarray, n_trees: int, sample_size: int, min_samples_leaf: int = 5,

):

# use constant random seed for testing

np.random.seed(42)

self.X = X

self.y = y

self.n_trees = n_trees

self.sample_size = sample_size

self.min_samples_leaf = min_samples_leaf

self.trees = [self.create_tree() for i in range(n_trees)]

# instance method

def create_tree(self):

# grab random subsample without replacement - no bootstrapping!

random_indices = np.random.permutation(len(self.y))[: self.sample_size]

return DecisionTree(

self.X.iloc[random_indices], self.y.iloc[random_indices], min_samples_leaf=self.min_samples_leaf,

)

# instance method

def predict(self, X):

return np.mean([t.predict(X) for t in self.trees], axis=0)

class DecisionTree:

def __init__(self, X, y, indices=None, min_samples_leaf=5):

# use all rows for root node

if indices is None:

indices = np.arange(len(y))

self.X = X

self.y = y

self.indices = indices

self.min_samples_leaf = min_samples_leaf

self.n_samples, self.n_features = len(indices), X.shape[1]

self.value = self.calculate_class_probabilities(self.y, self.indices)

self.gini = self.gini_impurity(self.y)

self.score = float("inf")

self.find_feature_split()

def find_feature_split(self):

for i in range(self.n_features):

self.find_better_split(i)

if self.is_leaf:

return

X = self.split_feature

# grab indices for left and right nodes

lhs = np.nonzero(X <= self.split)[0]

rhs = np.nonzero(X > self.split)[0]

# recursively calculate decision trees for all nodes

self.lhs = DecisionTree(self.X, self.y, self.indices[lhs])

self.rhs = DecisionTree(self.X, self.y, self.indices[rhs])

def find_better_split(self, feature_index):

X, y = self.X.values[self.indices, feature_index], self.y.values[self.indices]

sorted_indices = np.argsort(X)

y_sorted, X_sorted = y[sorted_indices], X[sorted_indices]

index = 0

for i in range(0, self.n_samples - self.min_samples_leaf):

index += 1

Xi = X_sorted[i]

# continue if stopping criterion not reached

if i < self.min_samples_leaf - 1 or Xi == X_sorted[i + 1]:

continue

# calculate Gini impurities for left and right nodes

lhs_gini = gini_impurity(self, y_sorted[:index])

rhs_gini = gini_impurity(self, y_sorted[index:])

# calculate score for current split point

curr_score = lhs_gini * len(y_sorted[:index]) / len(y_sorted) + rhs_gini * len(y_sorted[index:]) / len(

y_sorted

)

# update attributes if new score is smaller than old

if curr_score < self.score:

self.feature_index, self.score, self.split, self.gini = (

feature_index,

curr_score,

X_sorted[i],

gini_impurity(self, y),

)

def calculate_class_probabilities(self, y: pd.Series, indices: np.ndarray):

probs = np.unique(y.iloc[indices], return_counts=True)[1] / len(y.iloc[indices])

# handle edge case when leaf only contains one sample

if len(probs) == 1:

if int(y.iloc[indices].values[0]) == 0:

value = np.array([1.0, 0.0])

else:

value = np.array([0.0, 1.0])

else:

value = probs

return value

def gini_impurity(self, y):

return 1.0 - np.sum((np.unique(y, return_counts=True)[1] / len(y)) ** 2)

def predict(self, X):

return np.array([self.predict_row(Xi) for Xi in X])

def predict_row(self, Xi):

if self.is_leaf:

return self.value

t = self.lhs if Xi[self.feature_index] <= self.split else self.rhs

return t.predict_row(Xi)

@property

def split_name(self):

return self.X.columns[self.feature_index]

@property

def split_feature(self):

return self.X.values[self.indices, self.feature_index]

@property

def is_leaf(self):

return self.score == float("inf")

# convert object to string - see https://dbader.org/blog/python-repr-vs-str

def __repr__(self):

s = f"n_samples: {self.n_samples}; value: {self.value}; gini: {self.gini}"

if not self.is_leaf:

s += f"; score: {self.score}; feature: {self.split_name}; split_point: {self.split}"

return s

As a sanity check, let's evaluate the performance on a single tree:

tree = TreeEnsemble(X_train_sub, y_train, n_trees=1, sample_size=1000).trees[0]

X_samp, y_samp = tree.X, tree.y

# calculate class probabilities

probs = tree.predict(X_valid_sub.values)[:, 1]

# evaluate

roc_auc_score(y_valid, probs)

This matches our earlier result, so let's now roll-out to a full forest and compare against scikit-learn:

%time rf = TreeEnsemble(X_train_sub, y_train, n_trees=5, sample_size=1000)

probs = rf.predict(X_valid_sub.values)[:, 1]

roc_auc_score(y_valid, probs)

model = RandomForestClassifier(n_estimators=5, bootstrap=False, max_features=None, random_state=42, min_samples_leaf=5)

model.fit(X_train_sub, y_train)

probs_sklearn = model.predict_proba(X_valid_sub)[:, 1]

roc_auc_score(y_valid, probs_sklearn)

The final results are not the same, but ours did a little better, possibly due to differences in how we average the predictions across trees. In any case, we can be happy that our implementation that we wrote entirely from scratch is competitive with a well tested implementation!

Although our Random Forest implementation did OK on the ROC AUC score, its runtime performance leaves a lot to be desired. One way we could improve this is by following scikit-learn, who typically implement their algorithms in Cython, which allows you to generate efficient C code from Python. To use Cython in a Jupyter notebook we call the magic function:

%load_ext Cython

and then can compare the pure Python function

def fib1(n):

a, b = 0, 1

while b < n:

a, b = b, a + b

%timeit fib1(50)

against it's compiled version

%%cython

def fib2(n):

a, b = 0, 1

while b < n:

a, b = b, a + b

%timeit fib2(50)

We can gain even more performance gains by declaring the types explicitly:

%%cython

def fib3(int n):

cdef int b = 1

cdef int a = 0

cdef int t = 0

while b < n:

t = a

a = b

b = t + b

%timeit fib3(50)

In practice, I find that Numba provides a powerful alternative to Cython and does away with the need to learn new data types etc. To use Numba one simply needs to import it and add a decorator to your functions:

from numba import njit

@njit

def fib4(n):

a, b = 0, 1

while b < n:

a, b = b, a + b

%timeit fib4(50)

This is especially useful when working with NumPy functions, consider for example the generation of the Mandelbrot set using NumPy:

# example from - https://ipython-books.github.io/52-accelerating-pure-python-code-with-numba-and-just-in-time-compilation/

size = 400

iterations = 100

def mandelbrot_1(size, iterations):

m = np.zeros((size, size))

for i in range(size):

for j in range(size):

c = -2 + 3.0 / size * j + 1j * (1.5 - 3.0 / size * i)

z = 0

for n in range(iterations):

if np.abs(z) <= 10:

z = z * z + c

m[i, j] = n

else:

break

return m

m = mandelbrot_1(size, iterations)

fig, ax = plt.subplots(1, 1, figsize=(10, 10))

ax.imshow(np.log(m), cmap=plt.cm.hot)

ax.set_axis_off()

%timeit mandelbrot_1(size, iterations)

Adding @njit as a decorator gives a 300x speedup!

@njit

def mandelbrot_2(size, iterations):

m = np.zeros((size, size))

for i in range(size):

for j in range(size):

c = -2 + 3.0 / size * j + 1j * (1.5 - 3.0 / size * i)

z = 0

for n in range(iterations):

if np.abs(z) <= 10:

z = z * z + c

m[i, j] = n

else:

break

return m

%timeit mandelbrot_2(size, iterations)

Exercises

- An alternative measure of impurity is the so-called Shannon entropy, which measures the average information content of a message. In the context of classification, a set's entropy is zero when it contains examples of only one class. Use the definition of entropy

$$ H_i = \sum_{k=1}^n p_{i,k} \log p_{i,k} $$

to extend our

DecisionTreeclass with a newcriterionhyperparameter that allows one to use Gini impurity or entropy as a measure of a node's impurity. - In our implementation of

TreeEnsemblewe use all the features at every node to find the best split point. Extend the functionality by introducing amax_featureshyperparameter that controls the number of features used at every node in a manner similar to scikit-learn.