- Gain an introduction to the pandas library

- Understand the main steps involved in training a machine learning model

- Gain an introduction to scikit-learn's API

- Understand the need to generate a training and validation set

- Chapters 2 & 3 of Hands-On Machine Learning with Scikit-Learn and TensorFlow by Aurèlien Geron

- Section VII of A high-bias, low-variance introduction to Machine Learning for physicists by P. Mehta et al.

- Searching for exotic particles in high-energy physics with deep learning by Baldi et al.

The data

We will be analysing SUSY data from the Searching for exotic particles in high-energy physics with deep learning article by Baldi et al. (2014). The dataset can be obtained from the UCI Machine Learning repository and is described as follows:

The data has been produced using Monte Carlo simulations. The first 8 features are kinematic properties measured by the particle detectors in the accelerator. The last ten features are functions of the first 8 features; these are high-level features derived by physicists to help discriminate between the two classes. There is an interest in using deep learning methods to obviate the need for physicists to manually develop such features. Benchmark results using Bayesian Decision Trees from a standard physics package and 5-layer neural networks and the dropout algorithm are presented in the original paper. The last 500,000 examples are used as a test set.

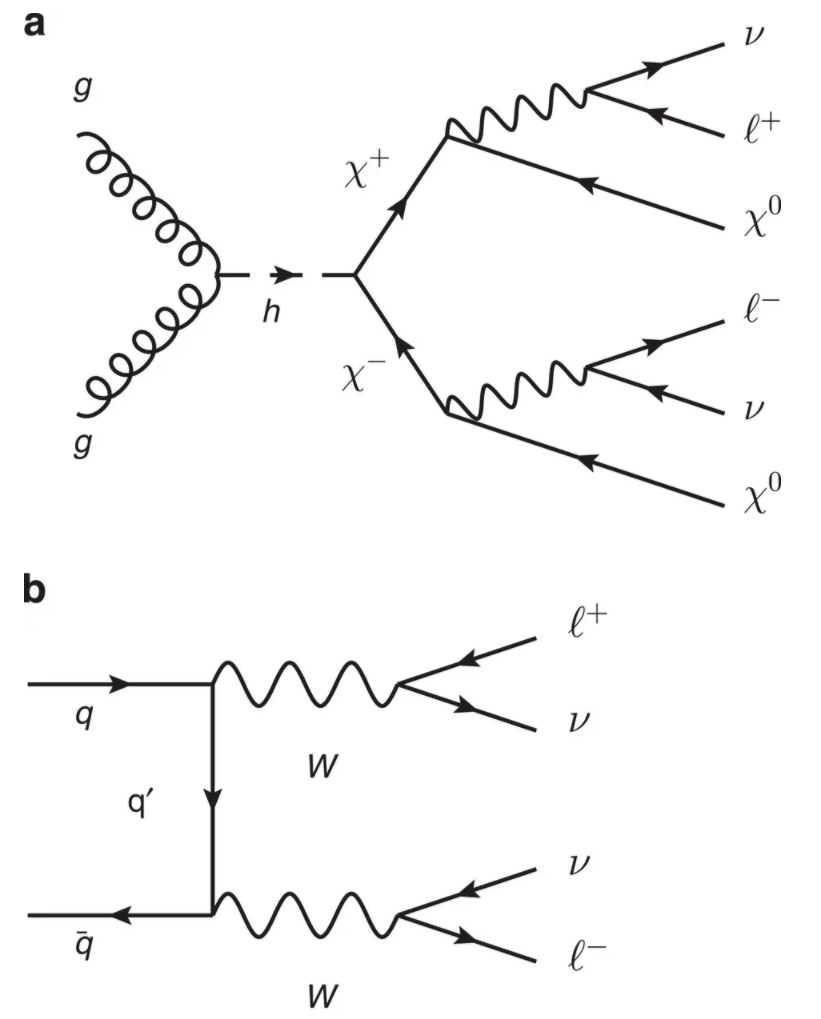

Framed as a supervised learning task, the goal is to train a model that can classify each event as either a SUSY signal or Standard Model background (see figure below).

Figure reference: https://www.nature.com/articles/ncomms5308

The dataset description also provides information on the features or kinematic variables associated with with each event:

The first column is the class label (1 for signal, 0 for background), followed by the 18 features (8 low-level features then 10 high-level features):lepton 1 pT, lepton 1 eta, lepton 1 phi, lepton 2 pT, lepton 2 eta, lepton 2 phi, missing energy magnitude, missing energy phi, MET_rel, axial MET, M_R, M_TR_2, R, MT2, S_R, M_Delta_R, dPhi_r_b, cos(theta_r1).

# reload modules before executing user code

%load_ext autoreload

# reload all modules every time before executing Python code

%autoreload 2

# render plots in notebook

%matplotlib inline

# uncomment this if running locally or on Google Colab

# !pip install --upgrade hepml

# data wrangling

import pandas as pd

import numpy as np

from pathlib import Path

from hepml.core import display_large, download_dataset

# data viz

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.metrics import plot_roc_curve, plot_confusion_matrix

sns.set(color_codes=True)

sns.set_palette(sns.color_palette("muted"))

# ml magic

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score, roc_auc_score

from sklearn.ensemble import RandomForestClassifier

For simplicity, we have stored a copy of the data on Google Drive which can be fetched by running the following function:

# this is a big file and can take a while to download

download_dataset("susy.csv.gz")

To load our dataset we need to tell pandas where to look for it. First, lets have a look at what we have in the data/ directory:

DATA = Path("../data")

!ls {DATA}

With pathlib it is a simple matter to define the filepath to the dataset, and since the file is in a (compressed) CSV format we can load it as a pandas.DataFrame as follows:

columns = [

"signal",

"lepton 1 pT",

"lepton 1 eta",

"lepton 1 phi",

"lepton 2 pT",

"lepton 2 eta",

"lepton 2 phi",

"missing energy magnitude",

"missing energy phi",

"MET_rel",

"axial MET",

"M_R",

"M_TR_2",

"R",

"MT2",

"S_R",

"M_Delta_R",

"dPhi_r_b",

"cos(theta_r1)",

]

# this can take a while to load ...

%time susy = pd.read_csv(DATA / "susy.csv.gz", compression="gzip", names=columns)

Loading and saving large datasets like this one can be a major bottleneck in the exploratory phase of a machine learning project. Fortunately there are several binary formats that can be used for storing data on disk, and Feather is one of the fastest for I/O and memory usage (see benchmark).

Thus one way to speed up our I/O cycles is to first save our pandas.DataFrame to disk in Feather format so we can reuse it in downstream tasks:

susy.to_feather(DATA / "susy.feather")

%time susy = pd.read_feather(DATA/'susy.feather')

That's about 100x speedup factor - not bad!

This first thing you should do after creating a pandas.DataFrame is to inspect the first/last few rows to make sure there's no surprises in the data format that need to be dealt with. For example, one often finds metadata or aggregations at the end of CSV/Excel files, and this can cause problems if not handled correctly.

The DataFrame.head method displays the first five rows of a pandas.DataFrame:

susy.head()

If we want to see more rows, we just pass an integer argument to the method as follows:

susy.head(10)

Similar to DataFrame.head, the DataFrame.tail method returns the last five rows of a pandas.DataFrame:

susy.tail()

Whenever we have a new dataset it is handy to begin by getting an idea of how large the pandas.DataFrame is. This can be done with either the len or DataFrame.shape methods:

# get number of rows

len(susy)

# get tuples of (n_rows, n_columns)

susy.shape

susy.info()

Luckily for us, it looks like we're dealing with purely numeric data types and there's no missing values 🥳🥳🥳! Let's verify the latter is indeed true:

assert susy.isnull().sum().sum() == 0

Another quick way to get a feel for the type of numeric data that you are dealing with is to plot one or more of the following:

- Histograms: shows the number of instances (on the vertical axis) that have a given value range (on the horizontal axis). Useful for understanding the shape of a single variable.

- Count plots: shows the counts of observations in each categorical bin using bars. A count plot can be thought of as a histogram across a categorical, instead of quantitative, variable.

To create these plots we will use the seaborn data visualisation library. In the same way that pd is the accepted alias for pandas, one uses sns as the alias for seaborn.

Histograms are one of the most simple, yet powerful types of data visualisation. They can quickly tell us which values are most frequent, whether there are outliers and more. To create a histogram in seaborn, we can use the seaborn.distplot method:

sns.distplot(susy.query("signal == 1")["axial MET"], kde=False)

sns.distplot(susy.query("signal == 0")["axial MET"], kde=False)

plt.show()

Doing this manually for each feature of interest is tiresome, so let's write a simple function that generalises the process:

def plot_histogram(feature_name: str, df: pd.DataFrame):

sns.distplot(df.query("signal == 1")[feature_name], label="Signal", kde=False)

sns.distplot(df.query("signal == 0")[feature_name], label="Background", kde=False)

plt.legend(bbox_to_anchor=(1.01, 1), loc="upper left")

plt.show()

plot_histogram("lepton 1 pT", susy)

plot_histogram("MET_rel", susy)

Count plots are handy when you have a categorical feature like signal and you want to quickly see the frequencies per category:

sns.countplot(x="signal", data=susy)

plt.show()

susy["signal"].value_counts(normalize=True)

From the plot and numbers we see that the "signal" and "background" classes are nearly balanced, so if we built a dumb classifier that just classifies every single event as "background", then we would be right about 54.2% of the time. Clearly our goal is to do much better than this!

Before diving into the model-building phase, let's create a test set to limit ourselves from the data snooping bias. According to the dataset description the last 500,000 rows are used as the test set in the original article, so let's use the same set here:

susy_train = susy.copy().iloc[:4500000]

susy_test = susy.copy().iloc[-500000:]

# sanity check on number of rows

assert len(susy_train) == 4_500_000

assert len(susy_test) == 500_000

Let's also save these files to disk for later use:

susy_train.to_feather(DATA / "susy_train.feather")

# feather requires a default pandas.DataFrame index

susy_test.reset_index(drop=True).to_feather(DATA / "susy_test.feather")

With 4.5 million events in our dataset, it will be time-consuming to train our models. One way to speed up the rate of iteration, is to select a random sample of 100,000 events to work with:

susy_sample = susy_train.sample(n=100000, random_state=42)

While we are at it, let's create three pandas.DataFrame objects, one for:

- low-level features: these are direct measurements of final state particles, in this case the pT , pseudo-rapidity η, and azimuthal angle φ of two leptons in the event and the amount of missing transverse momentum (MET) together with its azimuthal angle.

- high-level features: these are higher order functions of the first 8 features and derived by physicists to help discriminate between the two classes. These high-level features can be thought of as the physicists’ attempt to use non-linear functions to classify signal and background events, having been developed with formidable theoretical effort.

- all features: the full set of 18 input features

low_features = [

"signal",

"lepton 1 pT",

"lepton 1 eta",

"lepton 1 phi",

"lepton 2 pT",

"lepton 2 eta",

"lepton 2 phi",

"missing energy magnitude",

"missing energy phi",

]

high_features = [

"signal",

"MET_rel",

"axial MET",

"M_R",

"M_TR_2",

"R",

"MT2",

"S_R",

"M_Delta_R",

"dPhi_r_b",

"cos(theta_r1)",

]

susy_low = susy_sample[low_features].copy()

susy_high = susy_sample[high_features].copy()

susy_all = susy_sample.copy()

pandas.DataFrame, it is good practice to use the DataFrame.copy method to make a copy of the original data. The reason for this is due to the way in which pandas distinguishes a "view" and "copy" of a pandas.DataFrame, and not forcing pandas to make a copy can have unintended side-effects in downstream analysis. For an in-depth discussion see https://www.dataquest.io/blog/settingwithcopywarning/Now that we've checked that the training data is clean and free from obvious anomalies, it's time to train our model! To do so, we will make use of the scikit-learn library.

scikit-learn is one of the best known Python libraries for machine learning and provides efficient implementations of a large number of common algorithms. It has a uniform Estimator API as well as excellent online documentation. The main benefit of its API is that once you understand the basic use and syntax of scikit-learn for one type of model, switching to a new model or algorithm is very easy.

Basics of the API

The most common steps one takes when building a model in scikit-learn are:

- Choose a class of model by importing the appropriate estimator class from scikit-learn.

- Choose model hyperparameters by instantiating this class with the desired values.

- Arrange data into a feature matrix and target vector (see discussion below).

- Fit the model to your data by calling the

fit()method. - Evaluate the predictions of the model:

- For supervised learning we typically predict labels for new data using the

predict()method. - For unsupervised learning, we often transform or infer properties of the data using the

transform()orpredict()methods.

- For supervised learning we typically predict labels for new data using the

Let's go through each of these steps to build a Random Forest regressor to predict California housing prices.

In scikit-learn, every class of model is represented by a Python class. We want a Random Forest classifier, so looking at the online docs we should import the RandomForestClasifier:

from sklearn.ensemble import RandomForestClassifier

Once we have chosen our model class, there are still some options open to us:

- What is the maximum depth of the tree? The default is

Nonewhich means the nodes are expanded until all leaves are pure. - Other parameters can be found in the docs, but for now we take a simple model with just 20 trees.

The above choices are often referred to as hyperparameters or parameters that must be set before the model is fit to the data. We can instantiate the RandomForestClassifier class and specify the desired hyperparameters as follows:

model = RandomForestClassifier(n_estimators=10, n_jobs=-1)

scikit-learn requires that the data be arranged into a two-dimensional feature matrix and a one-dimensional target array. By convention:

- The feature matrix is often stored in a variable called

X. This matrix is typically two-dimensional with shape[n_samples, n_features], wheren_samplesrefers to the number of rows (i.e. events in our case) andn_featuresrefers to all columns exceptsignalwhich is our target. - The target or label array is usually denoted by

y.

X = susy_all.drop("signal", axis=1)

y = susy_all["signal"]

Now it is time to apply our model to data! This can be done with the fit() method:

%time model.fit(X, y)

The final step is to generate predictions and evaluate them against some performance metric, e.g. accuracy:

preds = model.predict(X)

accuracy_score(y, preds)

Wait, wat!? How can the Random Forest model have almost no error at all? Is this a perfect model? Of course it is much more likely that the model has badly overfit the data. To be sure we need to use part of the training set for training and part for model validation.

One way to measure how well a model will generalise to new cases is to split your data into two sets: the training set and the validation set. As these names imply, you train your model using the training set and validate it using the validation set. The error rate on new cases is called the generalisation error and by evaluating your model on the validation set, you get an estimation of this error.

Creating a validation set is theoretically quite simple: just pick some instances randomly and set them aside (we set the random number generator's seed random_state so that is always generates the same shuffled indices):

X_train, X_valid, y_train, y_valid = train_test_split(X, y, test_size=0.2, random_state=42)

print(f"Dataset split: {len(X_train)} train rows + {len(X_valid)} valid rows")

With these two datasets, we first fit on the training set and evaluate the prediction on the validation one. To simplify the evaluation of our models, let's write a simple function to keep track of the scores:

def print_scores(fitted_model):

res = {

"Accuracy on train:": accuracy_score(fitted_model.predict(X_train), y_train),

"ROC AUC on train:": roc_auc_score(

y_train, fitted_model.predict_proba(X_train)[:, 1]

),

"Accuracy on valid:": accuracy_score(fitted_model.predict(X_valid), y_valid),

"ROC AUC on valid:": roc_auc_score(

y_valid, fitted_model.predict_proba(X_valid)[:, 1]

),

}

if hasattr(fitted_model, "oob_score_"):

res["OOB accuracy:"] = fitted_model.oob_score_

for k, v in res.items():

print(k, round(v, 3))

model = RandomForestClassifier(n_estimators=10, n_jobs=-1, random_state=42)

model.fit(X_train, y_train)

print_scores(model)

ROC curves and AUC

Note that in addition to accuracy, we also show a second value, the Area Under the ROC Curve (AUC). The AUC is a good summary statistic of the predictiveness of a binary classifier. It varies from zero to one, and a value of 0.5 corresponds to randomness (the classifier cannot distinguish at all between "signal" and "background"), while a value of 1.0 means that it is perfect.

The "ROC" refers to the Receiver Operating Characteristic curve which plots the true positive rate

$$ \mathrm{TPR} = \frac{\mathrm{TP}}{\mathrm{TP} + \mathrm{FP}} \,, \qquad \mathrm{TP\, (FP)} = \mathrm{number\, of\, true\, (false) \,positives}\,,$$

against the false positive rate FPR, where the FPR is the ratio of negative instances that are incorrectly classified as positive. In general there is a tradeoff between these two quantities: the higher the TPR, the more false positives (FPR) the classifier produces.

For our analysis, these ROC curves effectively plot signal efficiency versus background rejection at various thresholds of some discriminating variable. Here that variable will be the output signal probability of our Random Forest model. Let's write a simple function to visualise these outputs:

def plot_signal_vs_background(fitted_model):

valid_probs = model.predict_proba(X_valid)

df = pd.DataFrame({"probability": valid_probs[:, 1]})

df["signal"] = y_valid.values

sns.distplot(

df.query("signal == 1")["probability"],

kde=False,

bins=np.linspace(0, 1, 10),

label="Signal",

)

sns.distplot(

df.query("signal == 0")["probability"],

kde=False,

bins=np.linspace(0, 1, 10),

label="Background",

)

plt.legend(bbox_to_anchor=(1.01, 1), loc="upper left")

plt.show()

plot_signal_vs_background(model)

We see that although the model assigns high (low) probabilities to the signal (background) events, a fair amount of the signal events overlap with background ones. Another way to see this is to look at the $p_T$ distributions of two highest $p_T$ leptons:

# use plt.subplots() to create multiple plots

fig, (ax0, ax1) = plt.subplots(nrows=1, ncols=2, sharey=True, figsize=(12, 6))

# put one plot on axis ax0

sns.scatterplot(

x="lepton 1 pT",

y="lepton 2 pT",

data=susy.query("signal == 0"),

ax=ax0,

color="darkorange",

label="Background",

)

# put second plot on axis ax1

sns.scatterplot(

x="lepton 1 pT",

y="lepton 2 pT",

data=susy.query("signal == 1"),

ax=ax1,

color="b",

label="Signal",

)

# tight_layout() fixes spacing between plots

fig.tight_layout()

These plots show that instead of fixing a single threshold to evaluate our classifier, we should scan across various thresholds as in the ROC curve. In scikit-learn we can visualise the ROC curve of an estimator using the plotting API:

plot_roc_curve(model, X_valid, y_valid)

plt.show()

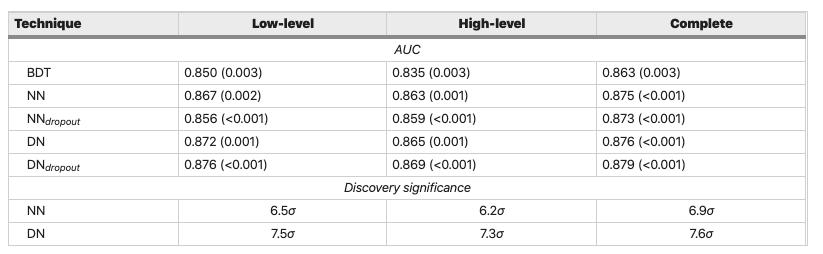

Looking at the scores in the original SUSY article, we see that our super simple model does not perform too badly - with an AUC of around 0.84 it falls short of the benchmark models by around 3-5% with the "complete" features. In the coming lessons we will explore techniques to improve upon this!

Figure reference: https://www.nature.com/articles/ncomms5308

Exercises

- Use the techniques in this lesson to build Random Forest models for the "low-level" and "high-level" set of features. How do their ROC AUC scores compare against the table above?

- Try coming up with some "rectangular" requirements (e.g. applying $p_T$ cuts) and see how the ROC curve for those requirements compares to using a Random Forest. See pp. 33-34 of the review.