Lecture 1 - Jet tagging with neural networks


A first look at training deep neural networks to classify jets in proton-proton collisions.

Learning objectives#

Understand what jet tagging is and how to frame it as a machine learning task

Understand the main steps needed to train and evaluate a jet tagger

Learn how to download and process data with the 🤗 Datasets library

Gain an introduction to the fastai library and how to push models to the Hugging Face Hub

References#

Chapter 1 of Deep Learning for Coders with fastai & PyTorch by Jeremy Howard and Sylvain Gugger.

The Machine Learning Landscape of Top Taggers by G. Kasieczka et al.

How Much Information is in a Jet? by K. Datta and A. Larkoski.

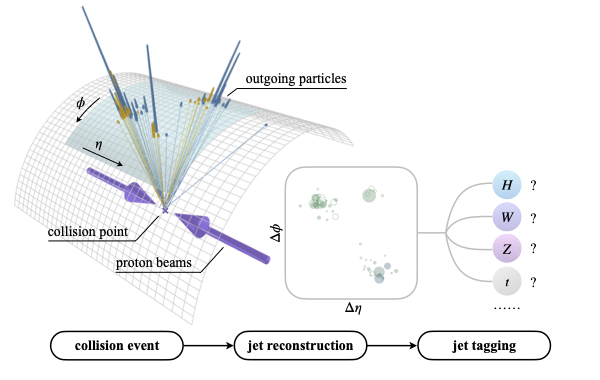

The task and data#

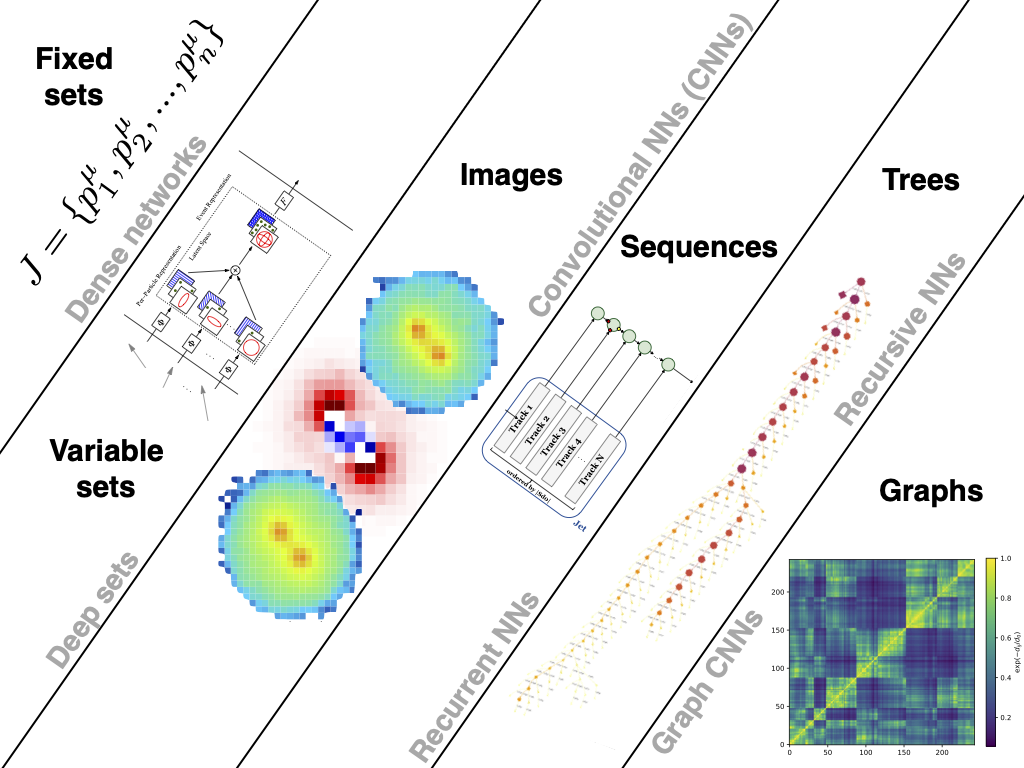

For the first few lectures, we’ll be analysing the Top Quark Tagging dataset, a well-known benchmark for comparing the performance of jet classification algorithms. The dataset consists of around 2 million Monte Carlo simulated proton-proton collision events that have been clustered into jets.

Framed as a supervised machine learning task, the goal is to train a model that can classify each jet as either a top-quark signal or quark-gluon background.

Fig. 2 Figure reference: Particle Transformer for Jet Tagging
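To make the supervised framing concrete, here is a minimal sketch in plain Python. Everything in it is an illustrative assumption rather than the method used in this lecture: the random numbers standing in for jet features, the hypothetical `toy_tagger` function with hand-picked weights, and the label encoding (1 for top-quark signal, 0 for background). It only shows the shape of the problem: one feature vector per jet goes in, a signal probability comes out, and the thresholded predictions are compared with known labels.

```python
import math
import random

random.seed(0)

N_FEATURES = 4  # toy size, chosen only for illustration
N_JETS = 5


def toy_tagger(features, weights, bias=0.0):
    """Hypothetical logistic model: maps a jet's features to P(signal)."""
    z = sum(w * x for w, x in zip(weights, features)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Random stand-ins for real jets: these numbers carry no physics
jets = [[random.gauss(0, 1) for _ in range(N_FEATURES)] for _ in range(N_JETS)]

# Assumed encoding: 1 = top-quark signal, 0 = quark-gluon background
labels = [random.randint(0, 1) for _ in range(N_JETS)]

# Hand-picked weights; a real tagger would learn these from training data
weights = [0.5, -0.25, 0.1, 0.0]

probs = [toy_tagger(jet, weights) for jet in jets]
preds = [int(p >= 0.5) for p in probs]  # threshold the probability at 0.5
accuracy = sum(p == t for p, t in zip(preds, labels)) / len(labels)

print(f"predictions: {preds}")
print(f"labels:      {labels}")
print(f"accuracy:    {accuracy:.2f}")
```

Training replaces the hand-picked weights with values that minimise a loss on labelled examples, and evaluation repeats the last few lines on jets the model has not seen.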

Setup#

# Uncomment and run this cell if using Colab, Kaggle etc

# %pip install fastai==2.6.0 datasets git+https://github.com/huggingface/huggingface_hub

# Check we have the correct fastai version

import fastai

assert fastai.__version__ == "2.6.0"

Import libraries#

from datasets import load_dataset

from fastai.tabular.all import *

from huggingface_hub import from_pretrained_fastai, notebook_login, push_to_hub_fastai

from scipy.interpolate import interp1d

from sklearn.metrics import accuracy_score, auc, roc_auc_score, roc_curve

from sklearn.model_selection import train_test_split

import datasets

# Suppress logs

datasets.logging.set_verbosity_error()

Getting the data#

We will use the 🤗 Datasets library to download and process the datasets that we’ll encounter in this course. 🤗 Datasets provides smart caching and allows you to process larger-than-RAM datasets by exploiting a technique called memory-mapping, which maps files on disk into a process’s address space so that data is read on demand instead of being loaded into RAM all at once.
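The idea behind memory-mapping can be illustrated with Python's standard-library `mmap` module. This is only a sketch of the general technique, not of how 🤗 Datasets implements it internally (the library works with Apache Arrow files); the file name `toy_column.bin` and the fixed-width float64 record layout are assumptions made for the example.

```python
import mmap
import os
import struct
import tempfile

N_VALUES = 200_000
RECORD = struct.Struct("<d")  # one little-endian float64 per record

# Write a toy "column" of numbers to disk (stand-in for a cached dataset file)
path = os.path.join(tempfile.mkdtemp(), "toy_column.bin")
with open(path, "wb") as f:
    for i in range(N_VALUES):
        f.write(RECORD.pack(float(i)))

# Map the file into memory: the OS loads pages lazily as they are touched,
# so reading one record does not require reading the whole file into RAM
with open(path, "rb") as f:
    with mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ) as mm:
        n_records = len(mm) // RECORD.size
        index = 123_456
        (value,) = RECORD.unpack_from(mm, index * RECORD.size)

print(f"{n_records} records on disk, record {index} = {value}")

os.remove(path)  # clean up the temporary file
```

Random access by index costs the same whether the file is a few megabytes or far larger than RAM, which is what makes this approach attractive for large datasets.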

To download the Top Quark Tagging dataset from the Hugging Face Hub, we can use the load_dataset() function:

top_tagging_ds = load_dataset("dl4phys/top_tagging")

If we look inside our top_tagging_ds object

top_tagging_ds

DatasetDict({

train: Dataset({

features: ['E_0', 'PX_0', 'PY_0', 'PZ_0', 'E_1', 'PX_1', 'PY_1', 'PZ_1', 'E_2', 'PX_2', 'PY_2', 'PZ_2', 'E_3', 'PX_3', 'PY_3', 'PZ_3', 'E_4', 'PX_4', 'PY_4', 'PZ_4', 'E_5', 'PX_5', 'PY_5', 'PZ_5', 'E_6', 'PX_6', 'PY_6', 'PZ_6', 'E_7', 'PX_7', 'PY_7', 'PZ_7', 'E_8', 'PX_8', 'PY_8', 'PZ_8', 'E_9', 'PX_9', 'PY_9', 'PZ_9', 'E_10', 'PX_10', 'PY_10', 'PZ_10', 'E_11', 'PX_11', 'PY_11', 'PZ_11', 'E_12', 'PX_12', 'PY_12', 'PZ_12', 'E_13', 'PX_13', 'PY_13', 'PZ_13', 'E_14', 'PX_14', 'PY_14', 'PZ_14', 'E_15', 'PX_15', 'PY_15', 'PZ_15', 'E_16', 'PX_16', 'PY_16', 'PZ_16', 'E_17', 'PX_17', 'PY_17', 'PZ_17', 'E_18', 'PX_18', 'PY_18', 'PZ_18', 'E_19', 'PX_19', 'PY_19', 'PZ_19', 'E_20', 'PX_20', 'PY_20', 'PZ_20', 'E_21', 'PX_21', 'PY_21', 'PZ_21', 'E_22', 'PX_22', 'PY_22', 'PZ_22', 'E_23', 'PX_23', 'PY_23', 'PZ_23', 'E_24', 'PX_24', 'PY_24', 'PZ_24', 'E_25', 'PX_25', 'PY_25', 'PZ_25', 'E_26', 'PX_26', 'PY_26', 'PZ_26', 'E_27', 'PX_27', 'PY_27', 'PZ_27', 'E_28', 'PX_28', 'PY_28', 'PZ_28', 'E_29', 'PX_29', 'PY_29', 'PZ_29', 'E_30', 'PX_30', 'PY_30', 'PZ_30', 'E_31', 'PX_31', 'PY_31', 'PZ_31', 'E_32', 'PX_32', 'PY_32', 'PZ_32', 'E_33', 'PX_33', 'PY_33', 'PZ_33', 'E_34', 'PX_34', 'PY_34', 'PZ_34', 'E_35', 'PX_35', 'PY_35', 'PZ_35', 'E_36', 'PX_36', 'PY_36', 'PZ_36', 'E_37', 'PX_37', 'PY_37', 'PZ_37', 'E_38', 'PX_38', 'PY_38', 'PZ_38', 'E_39', 'PX_39', 'PY_39', 'PZ_39', 'E_40', 'PX_40', 'PY_40', 'PZ_40', 'E_41', 'PX_41', 'PY_41', 'PZ_41', 'E_42', 'PX_42', 'PY_42', 'PZ_42', 'E_43', 'PX_43', 'PY_43', 'PZ_43', 'E_44', 'PX_44', 'PY_44', 'PZ_44', 'E_45', 'PX_45', 'PY_45', 'PZ_45', 'E_46', 'PX_46', 'PY_46', 'PZ_46', 'E_47', 'PX_47', 'PY_47', 'PZ_47', 'E_48', 'PX_48', 'PY_48', 'PZ_48', 'E_49', 'PX_49', 'PY_49', 'PZ_49', 'E_50', 'PX_50', 'PY_50', 'PZ_50', 'E_51', 'PX_51', 'PY_51', 'PZ_51', 'E_52', 'PX_52', 'PY_52', 'PZ_52', 'E_53', 'PX_53', 'PY_53', 'PZ_53', 'E_54', 'PX_54', 'PY_54', 'PZ_54', 'E_55', 'PX_55', 'PY_55', 'PZ_55', 'E_56', 'PX_56', 'PY_56', 'PZ_56', 'E_57', 'PX_57', 'PY_57', 
'PZ_57', 'E_58', 'PX_58', 'PY_58', 'PZ_58', 'E_59', 'PX_59', 'PY_59', 'PZ_59', 'E_60', 'PX_60', 'PY_60', 'PZ_60', 'E_61', 'PX_61', 'PY_61', 'PZ_61', 'E_62', 'PX_62', 'PY_62', 'PZ_62', 'E_63', 'PX_63', 'PY_63', 'PZ_63', 'E_64', 'PX_64', 'PY_64', 'PZ_64', 'E_65', 'PX_65', 'PY_65', 'PZ_65', 'E_66', 'PX_66', 'PY_66', 'PZ_66', 'E_67', 'PX_67', 'PY_67', 'PZ_67', 'E_68', 'PX_68', 'PY_68', 'PZ_68', 'E_69', 'PX_69', 'PY_69', 'PZ_69', 'E_70', 'PX_70', 'PY_70', 'PZ_70', 'E_71', 'PX_71', 'PY_71', 'PZ_71', 'E_72', 'PX_72', 'PY_72', 'PZ_72', 'E_73', 'PX_73', 'PY_73', 'PZ_73', 'E_74', 'PX_74', 'PY_74', 'PZ_74', 'E_75', 'PX_75', 'PY_75', 'PZ_75', 'E_76', 'PX_76', 'PY_76', 'PZ_76', 'E_77', 'PX_77', 'PY_77', 'PZ_77', 'E_78', 'PX_78', 'PY_78', 'PZ_78', 'E_79', 'PX_79', 'PY_79', 'PZ_79', 'E_80', 'PX_80', 'PY_80', 'PZ_80', 'E_81', 'PX_81', 'PY_81', 'PZ_81', 'E_82', 'PX_82', 'PY_82', 'PZ_82', 'E_83', 'PX_83', 'PY_83', 'PZ_83', 'E_84', 'PX_84', 'PY_84', 'PZ_84', 'E_85', 'PX_85', 'PY_85', 'PZ_85', 'E_86', 'PX_86', 'PY_86', 'PZ_86', 'E_87', 'PX_87', 'PY_87', 'PZ_87', 'E_88', 'PX_88', 'PY_88', 'PZ_88', 'E_89', 'PX_89', 'PY_89', 'PZ_89', 'E_90', 'PX_90', 'PY_90', 'PZ_90', 'E_91', 'PX_91', 'PY_91', 'PZ_91', 'E_92', 'PX_92', 'PY_92', 'PZ_92', 'E_93', 'PX_93', 'PY_93', 'PZ_93', 'E_94', 'PX_94', 'PY_94', 'PZ_94', 'E_95', 'PX_95', 'PY_95', 'PZ_95', 'E_96', 'PX_96', 'PY_96', 'PZ_96', 'E_97', 'PX_97', 'PY_97', 'PZ_97', 'E_98', 'PX_98', 'PY_98', 'PZ_98', 'E_99', 'PX_99', 'PY_99', 'PZ_99', 'E_100', 'PX_100', 'PY_100', 'PZ_100', 'E_101', 'PX_101', 'PY_101', 'PZ_101', 'E_102', 'PX_102', 'PY_102', 'PZ_102', 'E_103', 'PX_103', 'PY_103', 'PZ_103', 'E_104', 'PX_104', 'PY_104', 'PZ_104', 'E_105', 'PX_105', 'PY_105', 'PZ_105', 'E_106', 'PX_106', 'PY_106', 'PZ_106', 'E_107', 'PX_107', 'PY_107', 'PZ_107', 'E_108', 'PX_108', 'PY_108', 'PZ_108', 'E_109', 'PX_109', 'PY_109', 'PZ_109', 'E_110', 'PX_110', 'PY_110', 'PZ_110', 'E_111', 'PX_111', 'PY_111', 'PZ_111', 'E_112', 'PX_112', 'PY_112', 'PZ_112', 'E_113', 
'PX_113', 'PY_113', 'PZ_113', 'E_114', 'PX_114', 'PY_114', 'PZ_114', 'E_115', 'PX_115', 'PY_115', 'PZ_115', 'E_116', 'PX_116', 'PY_116', 'PZ_116', 'E_117', 'PX_117', 'PY_117', 'PZ_117', 'E_118', 'PX_118', 'PY_118', 'PZ_118', 'E_119', 'PX_119', 'PY_119', 'PZ_119', 'E_120', 'PX_120', 'PY_120', 'PZ_120', 'E_121', 'PX_121', 'PY_121', 'PZ_121', 'E_122', 'PX_122', 'PY_122', 'PZ_122', 'E_123', 'PX_123', 'PY_123', 'PZ_123', 'E_124', 'PX_124', 'PY_124', 'PZ_124', 'E_125', 'PX_125', 'PY_125', 'PZ_125', 'E_126', 'PX_126', 'PY_126', 'PZ_126', 'E_127', 'PX_127', 'PY_127', 'PZ_127', 'E_128', 'PX_128', 'PY_128', 'PZ_128', 'E_129', 'PX_129', 'PY_129', 'PZ_129', 'E_130', 'PX_130', 'PY_130', 'PZ_130', 'E_131', 'PX_131', 'PY_131', 'PZ_131', 'E_132', 'PX_132', 'PY_132', 'PZ_132', 'E_133', 'PX_133', 'PY_133', 'PZ_133', 'E_134', 'PX_134', 'PY_134', 'PZ_134', 'E_135', 'PX_135', 'PY_135', 'PZ_135', 'E_136', 'PX_136', 'PY_136', 'PZ_136', 'E_137', 'PX_137', 'PY_137', 'PZ_137', 'E_138', 'PX_138', 'PY_138', 'PZ_138', 'E_139', 'PX_139', 'PY_139', 'PZ_139', 'E_140', 'PX_140', 'PY_140', 'PZ_140', 'E_141', 'PX_141', 'PY_141', 'PZ_141', 'E_142', 'PX_142', 'PY_142', 'PZ_142', 'E_143', 'PX_143', 'PY_143', 'PZ_143', 'E_144', 'PX_144', 'PY_144', 'PZ_144', 'E_145', 'PX_145', 'PY_145', 'PZ_145', 'E_146', 'PX_146', 'PY_146', 'PZ_146', 'E_147', 'PX_147', 'PY_147', 'PZ_147', 'E_148', 'PX_148', 'PY_148', 'PZ_148', 'E_149', 'PX_149', 'PY_149', 'PZ_149', 'E_150', 'PX_150', 'PY_150', 'PZ_150', 'E_151', 'PX_151', 'PY_151', 'PZ_151', 'E_152', 'PX_152', 'PY_152', 'PZ_152', 'E_153', 'PX_153', 'PY_153', 'PZ_153', 'E_154', 'PX_154', 'PY_154', 'PZ_154', 'E_155', 'PX_155', 'PY_155', 'PZ_155', 'E_156', 'PX_156', 'PY_156', 'PZ_156', 'E_157', 'PX_157', 'PY_157', 'PZ_157', 'E_158', 'PX_158', 'PY_158', 'PZ_158', 'E_159', 'PX_159', 'PY_159', 'PZ_159', 'E_160', 'PX_160', 'PY_160', 'PZ_160', 'E_161', 'PX_161', 'PY_161', 'PZ_161', 'E_162', 'PX_162', 'PY_162', 'PZ_162', 'E_163', 'PX_163', 'PY_163', 'PZ_163', 'E_164', 'PX_164', 
'PY_164', 'PZ_164', 'E_165', 'PX_165', 'PY_165', 'PZ_165', 'E_166', 'PX_166', 'PY_166', 'PZ_166', 'E_167', 'PX_167', 'PY_167', 'PZ_167', 'E_168', 'PX_168', 'PY_168', 'PZ_168', 'E_169', 'PX_169', 'PY_169', 'PZ_169', 'E_170', 'PX_170', 'PY_170', 'PZ_170', 'E_171', 'PX_171', 'PY_171', 'PZ_171', 'E_172', 'PX_172', 'PY_172', 'PZ_172', 'E_173', 'PX_173', 'PY_173', 'PZ_173', 'E_174', 'PX_174', 'PY_174', 'PZ_174', 'E_175', 'PX_175', 'PY_175', 'PZ_175', 'E_176', 'PX_176', 'PY_176', 'PZ_176', 'E_177', 'PX_177', 'PY_177', 'PZ_177', 'E_178', 'PX_178', 'PY_178', 'PZ_178', 'E_179', 'PX_179', 'PY_179', 'PZ_179', 'E_180', 'PX_180', 'PY_180', 'PZ_180', 'E_181', 'PX_181', 'PY_181', 'PZ_181', 'E_182', 'PX_182', 'PY_182', 'PZ_182', 'E_183', 'PX_183', 'PY_183', 'PZ_183', 'E_184', 'PX_184', 'PY_184', 'PZ_184', 'E_185', 'PX_185', 'PY_185', 'PZ_185', 'E_186', 'PX_186', 'PY_186', 'PZ_186', 'E_187', 'PX_187', 'PY_187', 'PZ_187', 'E_188', 'PX_188', 'PY_188', 'PZ_188', 'E_189', 'PX_189', 'PY_189', 'PZ_189', 'E_190', 'PX_190', 'PY_190', 'PZ_190', 'E_191', 'PX_191', 'PY_191', 'PZ_191', 'E_192', 'PX_192', 'PY_192', 'PZ_192', 'E_193', 'PX_193', 'PY_193', 'PZ_193', 'E_194', 'PX_194', 'PY_194', 'PZ_194', 'E_195', 'PX_195', 'PY_195', 'PZ_195', 'E_196', 'PX_196', 'PY_196', 'PZ_196', 'E_197', 'PX_197', 'PY_197', 'PZ_197', 'E_198', 'PX_198', 'PY_198', 'PZ_198', 'E_199', 'PX_199', 'PY_199', 'PZ_199', 'truthE', 'truthPX', 'truthPY', 'truthPZ', 'ttv', 'is_signal_new'],

num_rows: 1211000

})

test: Dataset({

features: ['E_0', 'PX_0', 'PY_0', 'PZ_0', 'E_1', 'PX_1', 'PY_1', 'PZ_1', 'E_2', 'PX_2', 'PY_2', 'PZ_2', 'E_3', 'PX_3', 'PY_3', 'PZ_3', 'E_4', 'PX_4', 'PY_4', 'PZ_4', 'E_5', 'PX_5', 'PY_5', 'PZ_5', 'E_6', 'PX_6', 'PY_6', 'PZ_6', 'E_7', 'PX_7', 'PY_7', 'PZ_7', 'E_8', 'PX_8', 'PY_8', 'PZ_8', 'E_9', 'PX_9', 'PY_9', 'PZ_9', 'E_10', 'PX_10', 'PY_10', 'PZ_10', 'E_11', 'PX_11', 'PY_11', 'PZ_11', 'E_12', 'PX_12', 'PY_12', 'PZ_12', 'E_13', 'PX_13', 'PY_13', 'PZ_13', 'E_14', 'PX_14', 'PY_14', 'PZ_14', 'E_15', 'PX_15', 'PY_15', 'PZ_15', 'E_16', 'PX_16', 'PY_16', 'PZ_16', 'E_17', 'PX_17', 'PY_17', 'PZ_17', 'E_18', 'PX_18', 'PY_18', 'PZ_18', 'E_19', 'PX_19', 'PY_19', 'PZ_19', 'E_20', 'PX_20', 'PY_20', 'PZ_20', 'E_21', 'PX_21', 'PY_21', 'PZ_21', 'E_22', 'PX_22', 'PY_22', 'PZ_22', 'E_23', 'PX_23', 'PY_23', 'PZ_23', 'E_24', 'PX_24', 'PY_24', 'PZ_24', 'E_25', 'PX_25', 'PY_25', 'PZ_25', 'E_26', 'PX_26', 'PY_26', 'PZ_26', 'E_27', 'PX_27', 'PY_27', 'PZ_27', 'E_28', 'PX_28', 'PY_28', 'PZ_28', 'E_29', 'PX_29', 'PY_29', 'PZ_29', 'E_30', 'PX_30', 'PY_30', 'PZ_30', 'E_31', 'PX_31', 'PY_31', 'PZ_31', 'E_32', 'PX_32', 'PY_32', 'PZ_32', 'E_33', 'PX_33', 'PY_33', 'PZ_33', 'E_34', 'PX_34', 'PY_34', 'PZ_34', 'E_35', 'PX_35', 'PY_35', 'PZ_35', 'E_36', 'PX_36', 'PY_36', 'PZ_36', 'E_37', 'PX_37', 'PY_37', 'PZ_37', 'E_38', 'PX_38', 'PY_38', 'PZ_38', 'E_39', 'PX_39', 'PY_39', 'PZ_39', 'E_40', 'PX_40', 'PY_40', 'PZ_40', 'E_41', 'PX_41', 'PY_41', 'PZ_41', 'E_42', 'PX_42', 'PY_42', 'PZ_42', 'E_43', 'PX_43', 'PY_43', 'PZ_43', 'E_44', 'PX_44', 'PY_44', 'PZ_44', 'E_45', 'PX_45', 'PY_45', 'PZ_45', 'E_46', 'PX_46', 'PY_46', 'PZ_46', 'E_47', 'PX_47', 'PY_47', 'PZ_47', 'E_48', 'PX_48', 'PY_48', 'PZ_48', 'E_49', 'PX_49', 'PY_49', 'PZ_49', 'E_50', 'PX_50', 'PY_50', 'PZ_50', 'E_51', 'PX_51', 'PY_51', 'PZ_51', 'E_52', 'PX_52', 'PY_52', 'PZ_52', 'E_53', 'PX_53', 'PY_53', 'PZ_53', 'E_54', 'PX_54', 'PY_54', 'PZ_54', 'E_55', 'PX_55', 'PY_55', 'PZ_55', 'E_56', 'PX_56', 'PY_56', 'PZ_56', 'E_57', 'PX_57', 'PY_57', 
'PZ_57', 'E_58', 'PX_58', 'PY_58', 'PZ_58', 'E_59', 'PX_59', 'PY_59', 'PZ_59', 'E_60', 'PX_60', 'PY_60', 'PZ_60', 'E_61', 'PX_61', 'PY_61', 'PZ_61', 'E_62', 'PX_62', 'PY_62', 'PZ_62', 'E_63', 'PX_63', 'PY_63', 'PZ_63', 'E_64', 'PX_64', 'PY_64', 'PZ_64', 'E_65', 'PX_65', 'PY_65', 'PZ_65', 'E_66', 'PX_66', 'PY_66', 'PZ_66', 'E_67', 'PX_67', 'PY_67', 'PZ_67', 'E_68', 'PX_68', 'PY_68', 'PZ_68', 'E_69', 'PX_69', 'PY_69', 'PZ_69', 'E_70', 'PX_70', 'PY_70', 'PZ_70', 'E_71', 'PX_71', 'PY_71', 'PZ_71', 'E_72', 'PX_72', 'PY_72', 'PZ_72', 'E_73', 'PX_73', 'PY_73', 'PZ_73', 'E_74', 'PX_74', 'PY_74', 'PZ_74', 'E_75', 'PX_75', 'PY_75', 'PZ_75', 'E_76', 'PX_76', 'PY_76', 'PZ_76', 'E_77', 'PX_77', 'PY_77', 'PZ_77', 'E_78', 'PX_78', 'PY_78', 'PZ_78', 'E_79', 'PX_79', 'PY_79', 'PZ_79', 'E_80', 'PX_80', 'PY_80', 'PZ_80', 'E_81', 'PX_81', 'PY_81', 'PZ_81', 'E_82', 'PX_82', 'PY_82', 'PZ_82', 'E_83', 'PX_83', 'PY_83', 'PZ_83', 'E_84', 'PX_84', 'PY_84', 'PZ_84', 'E_85', 'PX_85', 'PY_85', 'PZ_85', 'E_86', 'PX_86', 'PY_86', 'PZ_86', 'E_87', 'PX_87', 'PY_87', 'PZ_87', 'E_88', 'PX_88', 'PY_88', 'PZ_88', 'E_89', 'PX_89', 'PY_89', 'PZ_89', 'E_90', 'PX_90', 'PY_90', 'PZ_90', 'E_91', 'PX_91', 'PY_91', 'PZ_91', 'E_92', 'PX_92', 'PY_92', 'PZ_92', 'E_93', 'PX_93', 'PY_93', 'PZ_93', 'E_94', 'PX_94', 'PY_94', 'PZ_94', 'E_95', 'PX_95', 'PY_95', 'PZ_95', 'E_96', 'PX_96', 'PY_96', 'PZ_96', 'E_97', 'PX_97', 'PY_97', 'PZ_97', 'E_98', 'PX_98', 'PY_98', 'PZ_98', 'E_99', 'PX_99', 'PY_99', 'PZ_99', 'E_100', 'PX_100', 'PY_100', 'PZ_100', 'E_101', 'PX_101', 'PY_101', 'PZ_101', 'E_102', 'PX_102', 'PY_102', 'PZ_102', 'E_103', 'PX_103', 'PY_103', 'PZ_103', 'E_104', 'PX_104', 'PY_104', 'PZ_104', 'E_105', 'PX_105', 'PY_105', 'PZ_105', 'E_106', 'PX_106', 'PY_106', 'PZ_106', 'E_107', 'PX_107', 'PY_107', 'PZ_107', 'E_108', 'PX_108', 'PY_108', 'PZ_108', 'E_109', 'PX_109', 'PY_109', 'PZ_109', 'E_110', 'PX_110', 'PY_110', 'PZ_110', 'E_111', 'PX_111', 'PY_111', 'PZ_111', 'E_112', 'PX_112', 'PY_112', 'PZ_112', 'E_113', 
'PX_113', 'PY_113', 'PZ_113', 'E_114', 'PX_114', 'PY_114', 'PZ_114', 'E_115', 'PX_115', 'PY_115', 'PZ_115', 'E_116', 'PX_116', 'PY_116', 'PZ_116', 'E_117', 'PX_117', 'PY_117', 'PZ_117', 'E_118', 'PX_118', 'PY_118', 'PZ_118', 'E_119', 'PX_119', 'PY_119', 'PZ_119', 'E_120', 'PX_120', 'PY_120', 'PZ_120', 'E_121', 'PX_121', 'PY_121', 'PZ_121', 'E_122', 'PX_122', 'PY_122', 'PZ_122', 'E_123', 'PX_123', 'PY_123', 'PZ_123', 'E_124', 'PX_124', 'PY_124', 'PZ_124', 'E_125', 'PX_125', 'PY_125', 'PZ_125', 'E_126', 'PX_126', 'PY_126', 'PZ_126', 'E_127', 'PX_127', 'PY_127', 'PZ_127', 'E_128', 'PX_128', 'PY_128', 'PZ_128', 'E_129', 'PX_129', 'PY_129', 'PZ_129', 'E_130', 'PX_130', 'PY_130', 'PZ_130', 'E_131', 'PX_131', 'PY_131', 'PZ_131', 'E_132', 'PX_132', 'PY_132', 'PZ_132', 'E_133', 'PX_133', 'PY_133', 'PZ_133', 'E_134', 'PX_134', 'PY_134', 'PZ_134', 'E_135', 'PX_135', 'PY_135', 'PZ_135', 'E_136', 'PX_136', 'PY_136', 'PZ_136', 'E_137', 'PX_137', 'PY_137', 'PZ_137', 'E_138', 'PX_138', 'PY_138', 'PZ_138', 'E_139', 'PX_139', 'PY_139', 'PZ_139', 'E_140', 'PX_140', 'PY_140', 'PZ_140', 'E_141', 'PX_141', 'PY_141', 'PZ_141', 'E_142', 'PX_142', 'PY_142', 'PZ_142', 'E_143', 'PX_143', 'PY_143', 'PZ_143', 'E_144', 'PX_144', 'PY_144', 'PZ_144', 'E_145', 'PX_145', 'PY_145', 'PZ_145', 'E_146', 'PX_146', 'PY_146', 'PZ_146', 'E_147', 'PX_147', 'PY_147', 'PZ_147', 'E_148', 'PX_148', 'PY_148', 'PZ_148', 'E_149', 'PX_149', 'PY_149', 'PZ_149', 'E_150', 'PX_150', 'PY_150', 'PZ_150', 'E_151', 'PX_151', 'PY_151', 'PZ_151', 'E_152', 'PX_152', 'PY_152', 'PZ_152', 'E_153', 'PX_153', 'PY_153', 'PZ_153', 'E_154', 'PX_154', 'PY_154', 'PZ_154', 'E_155', 'PX_155', 'PY_155', 'PZ_155', 'E_156', 'PX_156', 'PY_156', 'PZ_156', 'E_157', 'PX_157', 'PY_157', 'PZ_157', 'E_158', 'PX_158', 'PY_158', 'PZ_158', 'E_159', 'PX_159', 'PY_159', 'PZ_159', 'E_160', 'PX_160', 'PY_160', 'PZ_160', 'E_161', 'PX_161', 'PY_161', 'PZ_161', 'E_162', 'PX_162', 'PY_162', 'PZ_162', 'E_163', 'PX_163', 'PY_163', 'PZ_163', 'E_164', 'PX_164', 
'PY_164', 'PZ_164', 'E_165', 'PX_165', 'PY_165', 'PZ_165', 'E_166', 'PX_166', 'PY_166', 'PZ_166', 'E_167', 'PX_167', 'PY_167', 'PZ_167', 'E_168', 'PX_168', 'PY_168', 'PZ_168', 'E_169', 'PX_169', 'PY_169', 'PZ_169', 'E_170', 'PX_170', 'PY_170', 'PZ_170', 'E_171', 'PX_171', 'PY_171', 'PZ_171', 'E_172', 'PX_172', 'PY_172', 'PZ_172', 'E_173', 'PX_173', 'PY_173', 'PZ_173', 'E_174', 'PX_174', 'PY_174', 'PZ_174', 'E_175', 'PX_175', 'PY_175', 'PZ_175', 'E_176', 'PX_176', 'PY_176', 'PZ_176', 'E_177', 'PX_177', 'PY_177', 'PZ_177', 'E_178', 'PX_178', 'PY_178', 'PZ_178', 'E_179', 'PX_179', 'PY_179', 'PZ_179', 'E_180', 'PX_180', 'PY_180', 'PZ_180', 'E_181', 'PX_181', 'PY_181', 'PZ_181', 'E_182', 'PX_182', 'PY_182', 'PZ_182', 'E_183', 'PX_183', 'PY_183', 'PZ_183', 'E_184', 'PX_184', 'PY_184', 'PZ_184', 'E_185', 'PX_185', 'PY_185', 'PZ_185', 'E_186', 'PX_186', 'PY_186', 'PZ_186', 'E_187', 'PX_187', 'PY_187', 'PZ_187', 'E_188', 'PX_188', 'PY_188', 'PZ_188', 'E_189', 'PX_189', 'PY_189', 'PZ_189', 'E_190', 'PX_190', 'PY_190', 'PZ_190', 'E_191', 'PX_191', 'PY_191', 'PZ_191', 'E_192', 'PX_192', 'PY_192', 'PZ_192', 'E_193', 'PX_193', 'PY_193', 'PZ_193', 'E_194', 'PX_194', 'PY_194', 'PZ_194', 'E_195', 'PX_195', 'PY_195', 'PZ_195', 'E_196', 'PX_196', 'PY_196', 'PZ_196', 'E_197', 'PX_197', 'PY_197', 'PZ_197', 'E_198', 'PX_198', 'PY_198', 'PZ_198', 'E_199', 'PX_199', 'PY_199', 'PZ_199', 'truthE', 'truthPX', 'truthPY', 'truthPZ', 'ttv', 'is_signal_new'],

num_rows: 404000

})

validation: Dataset({

features: ['E_0', 'PX_0', 'PY_0', 'PZ_0', 'E_1', 'PX_1', 'PY_1', 'PZ_1', 'E_2', 'PX_2', 'PY_2', 'PZ_2', 'E_3', 'PX_3', 'PY_3', 'PZ_3', 'E_4', 'PX_4', 'PY_4', 'PZ_4', 'E_5', 'PX_5', 'PY_5', 'PZ_5', 'E_6', 'PX_6', 'PY_6', 'PZ_6', 'E_7', 'PX_7', 'PY_7', 'PZ_7', 'E_8', 'PX_8', 'PY_8', 'PZ_8', 'E_9', 'PX_9', 'PY_9', 'PZ_9', 'E_10', 'PX_10', 'PY_10', 'PZ_10', 'E_11', 'PX_11', 'PY_11', 'PZ_11', 'E_12', 'PX_12', 'PY_12', 'PZ_12', 'E_13', 'PX_13', 'PY_13', 'PZ_13', 'E_14', 'PX_14', 'PY_14', 'PZ_14', 'E_15', 'PX_15', 'PY_15', 'PZ_15', 'E_16', 'PX_16', 'PY_16', 'PZ_16', 'E_17', 'PX_17', 'PY_17', 'PZ_17', 'E_18', 'PX_18', 'PY_18', 'PZ_18', 'E_19', 'PX_19', 'PY_19', 'PZ_19', 'E_20', 'PX_20', 'PY_20', 'PZ_20', 'E_21', 'PX_21', 'PY_21', 'PZ_21', 'E_22', 'PX_22', 'PY_22', 'PZ_22', 'E_23', 'PX_23', 'PY_23', 'PZ_23', 'E_24', 'PX_24', 'PY_24', 'PZ_24', 'E_25', 'PX_25', 'PY_25', 'PZ_25', 'E_26', 'PX_26', 'PY_26', 'PZ_26', 'E_27', 'PX_27', 'PY_27', 'PZ_27', 'E_28', 'PX_28', 'PY_28', 'PZ_28', 'E_29', 'PX_29', 'PY_29', 'PZ_29', 'E_30', 'PX_30', 'PY_30', 'PZ_30', 'E_31', 'PX_31', 'PY_31', 'PZ_31', 'E_32', 'PX_32', 'PY_32', 'PZ_32', 'E_33', 'PX_33', 'PY_33', 'PZ_33', 'E_34', 'PX_34', 'PY_34', 'PZ_34', 'E_35', 'PX_35', 'PY_35', 'PZ_35', 'E_36', 'PX_36', 'PY_36', 'PZ_36', 'E_37', 'PX_37', 'PY_37', 'PZ_37', 'E_38', 'PX_38', 'PY_38', 'PZ_38', 'E_39', 'PX_39', 'PY_39', 'PZ_39', 'E_40', 'PX_40', 'PY_40', 'PZ_40', 'E_41', 'PX_41', 'PY_41', 'PZ_41', 'E_42', 'PX_42', 'PY_42', 'PZ_42', 'E_43', 'PX_43', 'PY_43', 'PZ_43', 'E_44', 'PX_44', 'PY_44', 'PZ_44', 'E_45', 'PX_45', 'PY_45', 'PZ_45', 'E_46', 'PX_46', 'PY_46', 'PZ_46', 'E_47', 'PX_47', 'PY_47', 'PZ_47', 'E_48', 'PX_48', 'PY_48', 'PZ_48', 'E_49', 'PX_49', 'PY_49', 'PZ_49', 'E_50', 'PX_50', 'PY_50', 'PZ_50', 'E_51', 'PX_51', 'PY_51', 'PZ_51', 'E_52', 'PX_52', 'PY_52', 'PZ_52', 'E_53', 'PX_53', 'PY_53', 'PZ_53', 'E_54', 'PX_54', 'PY_54', 'PZ_54', 'E_55', 'PX_55', 'PY_55', 'PZ_55', 'E_56', 'PX_56', 'PY_56', 'PZ_56', 'E_57', 'PX_57', 'PY_57', 
'PZ_57', 'E_58', 'PX_58', 'PY_58', 'PZ_58', 'E_59', 'PX_59', 'PY_59', 'PZ_59', 'E_60', 'PX_60', 'PY_60', 'PZ_60', 'E_61', 'PX_61', 'PY_61', 'PZ_61', 'E_62', 'PX_62', 'PY_62', 'PZ_62', 'E_63', 'PX_63', 'PY_63', 'PZ_63', 'E_64', 'PX_64', 'PY_64', 'PZ_64', 'E_65', 'PX_65', 'PY_65', 'PZ_65', 'E_66', 'PX_66', 'PY_66', 'PZ_66', 'E_67', 'PX_67', 'PY_67', 'PZ_67', 'E_68', 'PX_68', 'PY_68', 'PZ_68', 'E_69', 'PX_69', 'PY_69', 'PZ_69', 'E_70', 'PX_70', 'PY_70', 'PZ_70', 'E_71', 'PX_71', 'PY_71', 'PZ_71', 'E_72', 'PX_72', 'PY_72', 'PZ_72', 'E_73', 'PX_73', 'PY_73', 'PZ_73', 'E_74', 'PX_74', 'PY_74', 'PZ_74', 'E_75', 'PX_75', 'PY_75', 'PZ_75', 'E_76', 'PX_76', 'PY_76', 'PZ_76', 'E_77', 'PX_77', 'PY_77', 'PZ_77', 'E_78', 'PX_78', 'PY_78', 'PZ_78', 'E_79', 'PX_79', 'PY_79', 'PZ_79', 'E_80', 'PX_80', 'PY_80', 'PZ_80', 'E_81', 'PX_81', 'PY_81', 'PZ_81', 'E_82', 'PX_82', 'PY_82', 'PZ_82', 'E_83', 'PX_83', 'PY_83', 'PZ_83', 'E_84', 'PX_84', 'PY_84', 'PZ_84', 'E_85', 'PX_85', 'PY_85', 'PZ_85', 'E_86', 'PX_86', 'PY_86', 'PZ_86', 'E_87', 'PX_87', 'PY_87', 'PZ_87', 'E_88', 'PX_88', 'PY_88', 'PZ_88', 'E_89', 'PX_89', 'PY_89', 'PZ_89', 'E_90', 'PX_90', 'PY_90', 'PZ_90', 'E_91', 'PX_91', 'PY_91', 'PZ_91', 'E_92', 'PX_92', 'PY_92', 'PZ_92', 'E_93', 'PX_93', 'PY_93', 'PZ_93', 'E_94', 'PX_94', 'PY_94', 'PZ_94', 'E_95', 'PX_95', 'PY_95', 'PZ_95', 'E_96', 'PX_96', 'PY_96', 'PZ_96', 'E_97', 'PX_97', 'PY_97', 'PZ_97', 'E_98', 'PX_98', 'PY_98', 'PZ_98', 'E_99', 'PX_99', 'PY_99', 'PZ_99', 'E_100', 'PX_100', 'PY_100', 'PZ_100', 'E_101', 'PX_101', 'PY_101', 'PZ_101', 'E_102', 'PX_102', 'PY_102', 'PZ_102', 'E_103', 'PX_103', 'PY_103', 'PZ_103', 'E_104', 'PX_104', 'PY_104', 'PZ_104', 'E_105', 'PX_105', 'PY_105', 'PZ_105', 'E_106', 'PX_106', 'PY_106', 'PZ_106', 'E_107', 'PX_107', 'PY_107', 'PZ_107', 'E_108', 'PX_108', 'PY_108', 'PZ_108', 'E_109', 'PX_109', 'PY_109', 'PZ_109', 'E_110', 'PX_110', 'PY_110', 'PZ_110', 'E_111', 'PX_111', 'PY_111', 'PZ_111', 'E_112', 'PX_112', 'PY_112', 'PZ_112', 'E_113', 
'PX_113', 'PY_113', 'PZ_113', 'E_114', 'PX_114', 'PY_114', 'PZ_114', 'E_115', 'PX_115', 'PY_115', 'PZ_115', 'E_116', 'PX_116', 'PY_116', 'PZ_116', 'E_117', 'PX_117', 'PY_117', 'PZ_117', 'E_118', 'PX_118', 'PY_118', 'PZ_118', 'E_119', 'PX_119', 'PY_119', 'PZ_119', 'E_120', 'PX_120', 'PY_120', 'PZ_120', 'E_121', 'PX_121', 'PY_121', 'PZ_121', 'E_122', 'PX_122', 'PY_122', 'PZ_122', 'E_123', 'PX_123', 'PY_123', 'PZ_123', 'E_124', 'PX_124', 'PY_124', 'PZ_124', 'E_125', 'PX_125', 'PY_125', 'PZ_125', 'E_126', 'PX_126', 'PY_126', 'PZ_126', 'E_127', 'PX_127', 'PY_127', 'PZ_127', 'E_128', 'PX_128', 'PY_128', 'PZ_128', 'E_129', 'PX_129', 'PY_129', 'PZ_129', 'E_130', 'PX_130', 'PY_130', 'PZ_130', 'E_131', 'PX_131', 'PY_131', 'PZ_131', 'E_132', 'PX_132', 'PY_132', 'PZ_132', 'E_133', 'PX_133', 'PY_133', 'PZ_133', 'E_134', 'PX_134', 'PY_134', 'PZ_134', 'E_135', 'PX_135', 'PY_135', 'PZ_135', 'E_136', 'PX_136', 'PY_136', 'PZ_136', 'E_137', 'PX_137', 'PY_137', 'PZ_137', 'E_138', 'PX_138', 'PY_138', 'PZ_138', 'E_139', 'PX_139', 'PY_139', 'PZ_139', 'E_140', 'PX_140', 'PY_140', 'PZ_140', 'E_141', 'PX_141', 'PY_141', 'PZ_141', 'E_142', 'PX_142', 'PY_142', 'PZ_142', 'E_143', 'PX_143', 'PY_143', 'PZ_143', 'E_144', 'PX_144', 'PY_144', 'PZ_144', 'E_145', 'PX_145', 'PY_145', 'PZ_145', 'E_146', 'PX_146', 'PY_146', 'PZ_146', 'E_147', 'PX_147', 'PY_147', 'PZ_147', 'E_148', 'PX_148', 'PY_148', 'PZ_148', 'E_149', 'PX_149', 'PY_149', 'PZ_149', 'E_150', 'PX_150', 'PY_150', 'PZ_150', 'E_151', 'PX_151', 'PY_151', 'PZ_151', 'E_152', 'PX_152', 'PY_152', 'PZ_152', 'E_153', 'PX_153', 'PY_153', 'PZ_153', 'E_154', 'PX_154', 'PY_154', 'PZ_154', 'E_155', 'PX_155', 'PY_155', 'PZ_155', 'E_156', 'PX_156', 'PY_156', 'PZ_156', 'E_157', 'PX_157', 'PY_157', 'PZ_157', 'E_158', 'PX_158', 'PY_158', 'PZ_158', 'E_159', 'PX_159', 'PY_159', 'PZ_159', 'E_160', 'PX_160', 'PY_160', 'PZ_160', 'E_161', 'PX_161', 'PY_161', 'PZ_161', 'E_162', 'PX_162', 'PY_162', 'PZ_162', 'E_163', 'PX_163', 'PY_163', 'PZ_163', 'E_164', 'PX_164', 
'PY_164', 'PZ_164', 'E_165', 'PX_165', 'PY_165', 'PZ_165', 'E_166', 'PX_166', 'PY_166', 'PZ_166', 'E_167', 'PX_167', 'PY_167', 'PZ_167', 'E_168', 'PX_168', 'PY_168', 'PZ_168', 'E_169', 'PX_169', 'PY_169', 'PZ_169', 'E_170', 'PX_170', 'PY_170', 'PZ_170', 'E_171', 'PX_171', 'PY_171', 'PZ_171', 'E_172', 'PX_172', 'PY_172', 'PZ_172', 'E_173', 'PX_173', 'PY_173', 'PZ_173', 'E_174', 'PX_174', 'PY_174', 'PZ_174', 'E_175', 'PX_175', 'PY_175', 'PZ_175', 'E_176', 'PX_176', 'PY_176', 'PZ_176', 'E_177', 'PX_177', 'PY_177', 'PZ_177', 'E_178', 'PX_178', 'PY_178', 'PZ_178', 'E_179', 'PX_179', 'PY_179', 'PZ_179', 'E_180', 'PX_180', 'PY_180', 'PZ_180', 'E_181', 'PX_181', 'PY_181', 'PZ_181', 'E_182', 'PX_182', 'PY_182', 'PZ_182', 'E_183', 'PX_183', 'PY_183', 'PZ_183', 'E_184', 'PX_184', 'PY_184', 'PZ_184', 'E_185', 'PX_185', 'PY_185', 'PZ_185', 'E_186', 'PX_186', 'PY_186', 'PZ_186', 'E_187', 'PX_187', 'PY_187', 'PZ_187', 'E_188', 'PX_188', 'PY_188', 'PZ_188', 'E_189', 'PX_189', 'PY_189', 'PZ_189', 'E_190', 'PX_190', 'PY_190', 'PZ_190', 'E_191', 'PX_191', 'PY_191', 'PZ_191', 'E_192', 'PX_192', 'PY_192', 'PZ_192', 'E_193', 'PX_193', 'PY_193', 'PZ_193', 'E_194', 'PX_194', 'PY_194', 'PZ_194', 'E_195', 'PX_195', 'PY_195', 'PZ_195', 'E_196', 'PX_196', 'PY_196', 'PZ_196', 'E_197', 'PX_197', 'PY_197', 'PZ_197', 'E_198', 'PX_198', 'PY_198', 'PZ_198', 'E_199', 'PX_199', 'PY_199', 'PZ_199', 'truthE', 'truthPX', 'truthPY', 'truthPZ', 'ttv', 'is_signal_new'],

num_rows: 403000

})

})

we see that it behaves like a Python dictionary, with each key corresponding to a different split. We can therefore use the usual dictionary syntax to access an individual split:

top_tagging_ds["train"]

Dataset({

features: ['E_0', 'PX_0', 'PY_0', 'PZ_0', 'E_1', 'PX_1', 'PY_1', 'PZ_1', 'E_2', 'PX_2', 'PY_2', 'PZ_2', 'E_3', 'PX_3', 'PY_3', 'PZ_3', 'E_4', 'PX_4', 'PY_4', 'PZ_4', 'E_5', 'PX_5', 'PY_5', 'PZ_5', 'E_6', 'PX_6', 'PY_6', 'PZ_6', 'E_7', 'PX_7', 'PY_7', 'PZ_7', 'E_8', 'PX_8', 'PY_8', 'PZ_8', 'E_9', 'PX_9', 'PY_9', 'PZ_9', 'E_10', 'PX_10', 'PY_10', 'PZ_10', 'E_11', 'PX_11', 'PY_11', 'PZ_11', 'E_12', 'PX_12', 'PY_12', 'PZ_12', 'E_13', 'PX_13', 'PY_13', 'PZ_13', 'E_14', 'PX_14', 'PY_14', 'PZ_14', 'E_15', 'PX_15', 'PY_15', 'PZ_15', 'E_16', 'PX_16', 'PY_16', 'PZ_16', 'E_17', 'PX_17', 'PY_17', 'PZ_17', 'E_18', 'PX_18', 'PY_18', 'PZ_18', 'E_19', 'PX_19', 'PY_19', 'PZ_19', 'E_20', 'PX_20', 'PY_20', 'PZ_20', 'E_21', 'PX_21', 'PY_21', 'PZ_21', 'E_22', 'PX_22', 'PY_22', 'PZ_22', 'E_23', 'PX_23', 'PY_23', 'PZ_23', 'E_24', 'PX_24', 'PY_24', 'PZ_24', 'E_25', 'PX_25', 'PY_25', 'PZ_25', 'E_26', 'PX_26', 'PY_26', 'PZ_26', 'E_27', 'PX_27', 'PY_27', 'PZ_27', 'E_28', 'PX_28', 'PY_28', 'PZ_28', 'E_29', 'PX_29', 'PY_29', 'PZ_29', 'E_30', 'PX_30', 'PY_30', 'PZ_30', 'E_31', 'PX_31', 'PY_31', 'PZ_31', 'E_32', 'PX_32', 'PY_32', 'PZ_32', 'E_33', 'PX_33', 'PY_33', 'PZ_33', 'E_34', 'PX_34', 'PY_34', 'PZ_34', 'E_35', 'PX_35', 'PY_35', 'PZ_35', 'E_36', 'PX_36', 'PY_36', 'PZ_36', 'E_37', 'PX_37', 'PY_37', 'PZ_37', 'E_38', 'PX_38', 'PY_38', 'PZ_38', 'E_39', 'PX_39', 'PY_39', 'PZ_39', 'E_40', 'PX_40', 'PY_40', 'PZ_40', 'E_41', 'PX_41', 'PY_41', 'PZ_41', 'E_42', 'PX_42', 'PY_42', 'PZ_42', 'E_43', 'PX_43', 'PY_43', 'PZ_43', 'E_44', 'PX_44', 'PY_44', 'PZ_44', 'E_45', 'PX_45', 'PY_45', 'PZ_45', 'E_46', 'PX_46', 'PY_46', 'PZ_46', 'E_47', 'PX_47', 'PY_47', 'PZ_47', 'E_48', 'PX_48', 'PY_48', 'PZ_48', 'E_49', 'PX_49', 'PY_49', 'PZ_49', 'E_50', 'PX_50', 'PY_50', 'PZ_50', 'E_51', 'PX_51', 'PY_51', 'PZ_51', 'E_52', 'PX_52', 'PY_52', 'PZ_52', 'E_53', 'PX_53', 'PY_53', 'PZ_53', 'E_54', 'PX_54', 'PY_54', 'PZ_54', 'E_55', 'PX_55', 'PY_55', 'PZ_55', 'E_56', 'PX_56', 'PY_56', 'PZ_56', 'E_57', 'PX_57', 'PY_57', 
'PZ_57', 'E_58', 'PX_58', 'PY_58', 'PZ_58', 'E_59', 'PX_59', 'PY_59', 'PZ_59', 'E_60', 'PX_60', 'PY_60', 'PZ_60', 'E_61', 'PX_61', 'PY_61', 'PZ_61', 'E_62', 'PX_62', 'PY_62', 'PZ_62', 'E_63', 'PX_63', 'PY_63', 'PZ_63', 'E_64', 'PX_64', 'PY_64', 'PZ_64', 'E_65', 'PX_65', 'PY_65', 'PZ_65', 'E_66', 'PX_66', 'PY_66', 'PZ_66', 'E_67', 'PX_67', 'PY_67', 'PZ_67', 'E_68', 'PX_68', 'PY_68', 'PZ_68', 'E_69', 'PX_69', 'PY_69', 'PZ_69', 'E_70', 'PX_70', 'PY_70', 'PZ_70', 'E_71', 'PX_71', 'PY_71', 'PZ_71', 'E_72', 'PX_72', 'PY_72', 'PZ_72', 'E_73', 'PX_73', 'PY_73', 'PZ_73', 'E_74', 'PX_74', 'PY_74', 'PZ_74', 'E_75', 'PX_75', 'PY_75', 'PZ_75', 'E_76', 'PX_76', 'PY_76', 'PZ_76', 'E_77', 'PX_77', 'PY_77', 'PZ_77', 'E_78', 'PX_78', 'PY_78', 'PZ_78', 'E_79', 'PX_79', 'PY_79', 'PZ_79', 'E_80', 'PX_80', 'PY_80', 'PZ_80', 'E_81', 'PX_81', 'PY_81', 'PZ_81', 'E_82', 'PX_82', 'PY_82', 'PZ_82', 'E_83', 'PX_83', 'PY_83', 'PZ_83', 'E_84', 'PX_84', 'PY_84', 'PZ_84', 'E_85', 'PX_85', 'PY_85', 'PZ_85', 'E_86', 'PX_86', 'PY_86', 'PZ_86', 'E_87', 'PX_87', 'PY_87', 'PZ_87', 'E_88', 'PX_88', 'PY_88', 'PZ_88', 'E_89', 'PX_89', 'PY_89', 'PZ_89', 'E_90', 'PX_90', 'PY_90', 'PZ_90', 'E_91', 'PX_91', 'PY_91', 'PZ_91', 'E_92', 'PX_92', 'PY_92', 'PZ_92', 'E_93', 'PX_93', 'PY_93', 'PZ_93', 'E_94', 'PX_94', 'PY_94', 'PZ_94', 'E_95', 'PX_95', 'PY_95', 'PZ_95', 'E_96', 'PX_96', 'PY_96', 'PZ_96', 'E_97', 'PX_97', 'PY_97', 'PZ_97', 'E_98', 'PX_98', 'PY_98', 'PZ_98', 'E_99', 'PX_99', 'PY_99', 'PZ_99', 'E_100', 'PX_100', 'PY_100', 'PZ_100', 'E_101', 'PX_101', 'PY_101', 'PZ_101', 'E_102', 'PX_102', 'PY_102', 'PZ_102', 'E_103', 'PX_103', 'PY_103', 'PZ_103', 'E_104', 'PX_104', 'PY_104', 'PZ_104', 'E_105', 'PX_105', 'PY_105', 'PZ_105', 'E_106', 'PX_106', 'PY_106', 'PZ_106', 'E_107', 'PX_107', 'PY_107', 'PZ_107', 'E_108', 'PX_108', 'PY_108', 'PZ_108', 'E_109', 'PX_109', 'PY_109', 'PZ_109', 'E_110', 'PX_110', 'PY_110', 'PZ_110', 'E_111', 'PX_111', 'PY_111', 'PZ_111', 'E_112', 'PX_112', 'PY_112', 'PZ_112', 'E_113', 
'PX_113', 'PY_113', 'PZ_113', 'E_114', 'PX_114', 'PY_114', 'PZ_114', 'E_115', 'PX_115', 'PY_115', 'PZ_115', 'E_116', 'PX_116', 'PY_116', 'PZ_116', 'E_117', 'PX_117', 'PY_117', 'PZ_117', 'E_118', 'PX_118', 'PY_118', 'PZ_118', 'E_119', 'PX_119', 'PY_119', 'PZ_119', 'E_120', 'PX_120', 'PY_120', 'PZ_120', 'E_121', 'PX_121', 'PY_121', 'PZ_121', 'E_122', 'PX_122', 'PY_122', 'PZ_122', 'E_123', 'PX_123', 'PY_123', 'PZ_123', 'E_124', 'PX_124', 'PY_124', 'PZ_124', 'E_125', 'PX_125', 'PY_125', 'PZ_125', 'E_126', 'PX_126', 'PY_126', 'PZ_126', 'E_127', 'PX_127', 'PY_127', 'PZ_127', 'E_128', 'PX_128', 'PY_128', 'PZ_128', 'E_129', 'PX_129', 'PY_129', 'PZ_129', 'E_130', 'PX_130', 'PY_130', 'PZ_130', 'E_131', 'PX_131', 'PY_131', 'PZ_131', 'E_132', 'PX_132', 'PY_132', 'PZ_132', 'E_133', 'PX_133', 'PY_133', 'PZ_133', 'E_134', 'PX_134', 'PY_134', 'PZ_134', 'E_135', 'PX_135', 'PY_135', 'PZ_135', 'E_136', 'PX_136', 'PY_136', 'PZ_136', 'E_137', 'PX_137', 'PY_137', 'PZ_137', 'E_138', 'PX_138', 'PY_138', 'PZ_138', 'E_139', 'PX_139', 'PY_139', 'PZ_139', 'E_140', 'PX_140', 'PY_140', 'PZ_140', 'E_141', 'PX_141', 'PY_141', 'PZ_141', 'E_142', 'PX_142', 'PY_142', 'PZ_142', 'E_143', 'PX_143', 'PY_143', 'PZ_143', 'E_144', 'PX_144', 'PY_144', 'PZ_144', 'E_145', 'PX_145', 'PY_145', 'PZ_145', 'E_146', 'PX_146', 'PY_146', 'PZ_146', 'E_147', 'PX_147', 'PY_147', 'PZ_147', 'E_148', 'PX_148', 'PY_148', 'PZ_148', 'E_149', 'PX_149', 'PY_149', 'PZ_149', 'E_150', 'PX_150', 'PY_150', 'PZ_150', 'E_151', 'PX_151', 'PY_151', 'PZ_151', 'E_152', 'PX_152', 'PY_152', 'PZ_152', 'E_153', 'PX_153', 'PY_153', 'PZ_153', 'E_154', 'PX_154', 'PY_154', 'PZ_154', 'E_155', 'PX_155', 'PY_155', 'PZ_155', 'E_156', 'PX_156', 'PY_156', 'PZ_156', 'E_157', 'PX_157', 'PY_157', 'PZ_157', 'E_158', 'PX_158', 'PY_158', 'PZ_158', 'E_159', 'PX_159', 'PY_159', 'PZ_159', 'E_160', 'PX_160', 'PY_160', 'PZ_160', 'E_161', 'PX_161', 'PY_161', 'PZ_161', 'E_162', 'PX_162', 'PY_162', 'PZ_162', 'E_163', 'PX_163', 'PY_163', 'PZ_163', 'E_164', 'PX_164', 
'PY_164', 'PZ_164', 'E_165', 'PX_165', 'PY_165', 'PZ_165', 'E_166', 'PX_166', 'PY_166', 'PZ_166', 'E_167', 'PX_167', 'PY_167', 'PZ_167', 'E_168', 'PX_168', 'PY_168', 'PZ_168', 'E_169', 'PX_169', 'PY_169', 'PZ_169', 'E_170', 'PX_170', 'PY_170', 'PZ_170', 'E_171', 'PX_171', 'PY_171', 'PZ_171', 'E_172', 'PX_172', 'PY_172', 'PZ_172', 'E_173', 'PX_173', 'PY_173', 'PZ_173', 'E_174', 'PX_174', 'PY_174', 'PZ_174', 'E_175', 'PX_175', 'PY_175', 'PZ_175', 'E_176', 'PX_176', 'PY_176', 'PZ_176', 'E_177', 'PX_177', 'PY_177', 'PZ_177', 'E_178', 'PX_178', 'PY_178', 'PZ_178', 'E_179', 'PX_179', 'PY_179', 'PZ_179', 'E_180', 'PX_180', 'PY_180', 'PZ_180', 'E_181', 'PX_181', 'PY_181', 'PZ_181', 'E_182', 'PX_182', 'PY_182', 'PZ_182', 'E_183', 'PX_183', 'PY_183', 'PZ_183', 'E_184', 'PX_184', 'PY_184', 'PZ_184', 'E_185', 'PX_185', 'PY_185', 'PZ_185', 'E_186', 'PX_186', 'PY_186', 'PZ_186', 'E_187', 'PX_187', 'PY_187', 'PZ_187', 'E_188', 'PX_188', 'PY_188', 'PZ_188', 'E_189', 'PX_189', 'PY_189', 'PZ_189', 'E_190', 'PX_190', 'PY_190', 'PZ_190', 'E_191', 'PX_191', 'PY_191', 'PZ_191', 'E_192', 'PX_192', 'PY_192', 'PZ_192', 'E_193', 'PX_193', 'PY_193', 'PZ_193', 'E_194', 'PX_194', 'PY_194', 'PZ_194', 'E_195', 'PX_195', 'PY_195', 'PZ_195', 'E_196', 'PX_196', 'PY_196', 'PZ_196', 'E_197', 'PX_197', 'PY_197', 'PZ_197', 'E_198', 'PX_198', 'PY_198', 'PZ_198', 'E_199', 'PX_199', 'PY_199', 'PZ_199', 'truthE', 'truthPX', 'truthPY', 'truthPZ', 'ttv', 'is_signal_new'],

num_rows: 1211000

})

The Dataset object is one of the core data structures in 🤗 Datasets and behaves like an ordinary Python list, so we can query its length:

len(top_tagging_ds["train"])

1211000

or access a single element by its index:

top_tagging_ds["train"][0]

{'E_0': 474.0711364746094,

'PX_0': -250.34703063964844,

'PY_0': -223.65196228027344,

'PZ_0': -334.73809814453125,

'E_1': 103.23623657226562,

'PX_1': -48.8662223815918,

'PY_1': -56.790775299072266,

'PZ_1': -71.0254898071289,

'E_2': 105.25556945800781,

'PX_2': -55.415000915527344,

'PY_2': -49.96888732910156,

'PZ_2': -74.23626708984375,

'E_3': 40.17677688598633,

'PX_3': -21.760696411132812,

'PY_3': -18.71761131286621,

'PZ_3': -28.112215042114258,

'E_4': 22.4285831451416,

'PX_4': -11.835756301879883,

'PY_4': -10.374107360839844,

'PZ_4': -15.979177474975586,

'E_5': 20.334388732910156,

'PX_5': -10.950518608093262,

'PY_5': -9.545439720153809,

'PZ_5': -14.228776931762695,

'E_6': 19.030899047851562,

'PX_6': -10.243264198303223,

'PY_6': -9.004837036132812,

'PZ_6': -13.272662162780762,

'E_7': 13.460596084594727,

'PX_7': -7.3433637619018555,

'PY_7': -6.359743595123291,

'PZ_7': -9.317526817321777,

'E_8': 11.226107597351074,

'PX_8': -5.981515884399414,

'PY_8': -5.456268787384033,

'PZ_8': -7.776637554168701,

'E_9': 10.445060729980469,

'PX_9': -5.460624694824219,

'PY_9': -4.854524612426758,

'PZ_9': -7.464211463928223,

'E_10': 9.077269554138184,

'PX_10': -5.811364650726318,

'PY_10': -3.4854695796966553,

'PZ_10': -6.039566993713379,

'E_11': 9.056221008300781,

'PX_11': -4.758406162261963,

'PY_11': -4.0972113609313965,

'PZ_11': -6.525762557983398,

'E_12': 6.96318244934082,

'PX_12': -3.490816593170166,

'PY_12': -3.0960206985473633,

'PZ_12': -5.168632984161377,

'E_13': 5.772968769073486,

'PX_13': -2.934152364730835,

'PY_13': -2.7418923377990723,

'PZ_13': -4.147281646728516,

'E_14': 3.760998249053955,

'PX_14': -2.1263434886932373,

'PY_14': -1.7262251377105713,

'PZ_14': -2.5775797367095947,

'E_15': 2.9336676597595215,

'PX_15': -1.5918165445327759,

'PY_15': -1.277614951133728,

'PZ_15': -2.107184410095215,

'E_16': 2.729625940322876,

'PX_16': -1.4158698320388794,

'PY_16': -1.217659831047058,

'PZ_16': -1.9908478260040283,

'E_17': 2.7179172039031982,

'PX_17': -1.3310680389404297,

'PY_17': -1.0936245918273926,

'PZ_17': -2.102217197418213,

'E_18': 2.301811933517456,

'PX_18': -1.1343910694122314,

'PY_18': -1.1675245761871338,

'PZ_18': -1.6273847818374634,

'E_19': 1.5100955963134766,

'PX_19': -1.0334879159927368,

'PY_19': -0.7483676671981812,

'PZ_19': -0.8076121807098389,

'E_20': 1.340166687965393,

'PX_20': -0.8890589475631714,

'PY_20': -0.7178719639778137,

'PZ_20': -0.700200617313385,

'E_21': 1.0327688455581665,

'PX_21': -0.11786821484565735,

'PY_21': -0.49550384283065796,

'PZ_21': -0.8984400629997253,

'E_22': 0.4266541004180908,

'PX_22': -0.2976595461368561,

'PY_22': -0.10618320107460022,

'PZ_22': -0.2866315543651581,

'E_23': 0.0,

'PX_23': 0.0,

'PY_23': 0.0,

'PZ_23': 0.0,

'E_24': 0.0,

'PX_24': 0.0,

'PY_24': 0.0,

'PZ_24': 0.0,

'E_25': 0.0,

'PX_25': 0.0,

'PY_25': 0.0,

'PZ_25': 0.0,

'E_26': 0.0,

'PX_26': 0.0,

'PY_26': 0.0,

'PZ_26': 0.0,

'E_27': 0.0,

'PX_27': 0.0,

'PY_27': 0.0,

'PZ_27': 0.0,

'E_28': 0.0,

'PX_28': 0.0,

'PY_28': 0.0,

'PZ_28': 0.0,

'E_29': 0.0,

'PX_29': 0.0,

'PY_29': 0.0,

'PZ_29': 0.0,

'E_30': 0.0,

'PX_30': 0.0,

'PY_30': 0.0,

'PZ_30': 0.0,

'E_31': 0.0,

'PX_31': 0.0,

'PY_31': 0.0,

'PZ_31': 0.0,

'E_32': 0.0,

'PX_32': 0.0,

'PY_32': 0.0,

'PZ_32': 0.0,

'E_33': 0.0,

'PX_33': 0.0,

'PY_33': 0.0,

'PZ_33': 0.0,

'E_34': 0.0,

'PX_34': 0.0,

'PY_34': 0.0,

'PZ_34': 0.0,

'E_35': 0.0,

'PX_35': 0.0,

'PY_35': 0.0,

'PZ_35': 0.0,

'E_36': 0.0,

'PX_36': 0.0,

'PY_36': 0.0,

'PZ_36': 0.0,

'E_37': 0.0,

'PX_37': 0.0,

'PY_37': 0.0,

'PZ_37': 0.0,

'E_38': 0.0,

'PX_38': 0.0,

'PY_38': 0.0,

'PZ_38': 0.0,

'E_39': 0.0,

'PX_39': 0.0,

'PY_39': 0.0,

'PZ_39': 0.0,

'E_40': 0.0,

'PX_40': 0.0,

'PY_40': 0.0,

'PZ_40': 0.0,

'E_41': 0.0,

'PX_41': 0.0,

'PY_41': 0.0,

'PZ_41': 0.0,

'E_42': 0.0,

'PX_42': 0.0,

'PY_42': 0.0,

'PZ_42': 0.0,

'E_43': 0.0,

'PX_43': 0.0,

'PY_43': 0.0,

'PZ_43': 0.0,

'E_44': 0.0,

'PX_44': 0.0,

'PY_44': 0.0,

'PZ_44': 0.0,

'E_45': 0.0,

'PX_45': 0.0,

'PY_45': 0.0,

'PZ_45': 0.0,

'E_46': 0.0,

'PX_46': 0.0,

'PY_46': 0.0,

'PZ_46': 0.0,

'E_47': 0.0,

'PX_47': 0.0,

'PY_47': 0.0,

'PZ_47': 0.0,

'E_48': 0.0,

'PX_48': 0.0,

'PY_48': 0.0,

'PZ_48': 0.0,

'E_49': 0.0,

'PX_49': 0.0,

'PY_49': 0.0,

'PZ_49': 0.0,

'E_50': 0.0,

'PX_50': 0.0,

'PY_50': 0.0,

'PZ_50': 0.0,

'E_51': 0.0,

'PX_51': 0.0,

'PY_51': 0.0,

'PZ_51': 0.0,

'E_52': 0.0,

'PX_52': 0.0,

'PY_52': 0.0,

'PZ_52': 0.0,

'E_53': 0.0,

'PX_53': 0.0,

'PY_53': 0.0,

'PZ_53': 0.0,

'E_54': 0.0,

'PX_54': 0.0,

'PY_54': 0.0,

'PZ_54': 0.0,

'E_55': 0.0,

'PX_55': 0.0,

'PY_55': 0.0,

'PZ_55': 0.0,

'E_56': 0.0,

'PX_56': 0.0,

'PY_56': 0.0,

'PZ_56': 0.0,

'E_57': 0.0,

'PX_57': 0.0,

'PY_57': 0.0,

'PZ_57': 0.0,

'E_58': 0.0,

'PX_58': 0.0,

'PY_58': 0.0,

'PZ_58': 0.0,

'E_59': 0.0,

'PX_59': 0.0,

'PY_59': 0.0,

'PZ_59': 0.0,

'E_60': 0.0,

'PX_60': 0.0,

'PY_60': 0.0,

'PZ_60': 0.0,

'E_61': 0.0,

'PX_61': 0.0,

'PY_61': 0.0,

'PZ_61': 0.0,

'E_62': 0.0,

'PX_62': 0.0,

'PY_62': 0.0,

'PZ_62': 0.0,

'E_63': 0.0,

'PX_63': 0.0,

'PY_63': 0.0,

'PZ_63': 0.0,

'E_64': 0.0,

'PX_64': 0.0,

'PY_64': 0.0,

'PZ_64': 0.0,

'E_65': 0.0,

'PX_65': 0.0,

'PY_65': 0.0,

'PZ_65': 0.0,

'E_66': 0.0,

'PX_66': 0.0,

'PY_66': 0.0,

'PZ_66': 0.0,

'E_67': 0.0,

'PX_67': 0.0,

'PY_67': 0.0,

'PZ_67': 0.0,

'E_68': 0.0,

'PX_68': 0.0,

'PY_68': 0.0,

'PZ_68': 0.0,

'E_69': 0.0,

'PX_69': 0.0,

'PY_69': 0.0,

'PZ_69': 0.0,

'E_70': 0.0,

'PX_70': 0.0,

'PY_70': 0.0,

'PZ_70': 0.0,

'E_71': 0.0,

'PX_71': 0.0,

'PY_71': 0.0,

'PZ_71': 0.0,

'E_72': 0.0,

'PX_72': 0.0,

'PY_72': 0.0,

'PZ_72': 0.0,

'E_73': 0.0,

'PX_73': 0.0,

'PY_73': 0.0,

'PZ_73': 0.0,

'E_74': 0.0,

'PX_74': 0.0,

'PY_74': 0.0,

'PZ_74': 0.0,

'E_75': 0.0,

'PX_75': 0.0,

'PY_75': 0.0,

'PZ_75': 0.0,

'E_76': 0.0,

'PX_76': 0.0,

'PY_76': 0.0,

'PZ_76': 0.0,

'E_77': 0.0,

'PX_77': 0.0,

'PY_77': 0.0,

'PZ_77': 0.0,

'E_78': 0.0,

'PX_78': 0.0,

'PY_78': 0.0,

'PZ_78': 0.0,

'E_79': 0.0,

'PX_79': 0.0,

'PY_79': 0.0,

'PZ_79': 0.0,

'E_80': 0.0,

'PX_80': 0.0,

'PY_80': 0.0,

'PZ_80': 0.0,

'E_81': 0.0,

'PX_81': 0.0,

'PY_81': 0.0,

'PZ_81': 0.0,

'E_82': 0.0,

'PX_82': 0.0,

'PY_82': 0.0,

'PZ_82': 0.0,

'E_83': 0.0,

'PX_83': 0.0,

'PY_83': 0.0,

'PZ_83': 0.0,

'E_84': 0.0,

'PX_84': 0.0,

'PY_84': 0.0,

'PZ_84': 0.0,

'E_85': 0.0,

'PX_85': 0.0,

'PY_85': 0.0,

'PZ_85': 0.0,

'E_86': 0.0,

'PX_86': 0.0,

'PY_86': 0.0,

'PZ_86': 0.0,

'E_87': 0.0,

'PX_87': 0.0,

'PY_87': 0.0,

'PZ_87': 0.0,

'E_88': 0.0,

'PX_88': 0.0,

'PY_88': 0.0,

'PZ_88': 0.0,

'E_89': 0.0,

'PX_89': 0.0,

'PY_89': 0.0,

'PZ_89': 0.0,

'E_90': 0.0,

'PX_90': 0.0,

'PY_90': 0.0,

'PZ_90': 0.0,

'E_91': 0.0,

'PX_91': 0.0,

'PY_91': 0.0,

'PZ_91': 0.0,

'E_92': 0.0,

'PX_92': 0.0,

'PY_92': 0.0,

'PZ_92': 0.0,

'E_93': 0.0,

'PX_93': 0.0,

'PY_93': 0.0,

'PZ_93': 0.0,

'E_94': 0.0,

'PX_94': 0.0,

'PY_94': 0.0,

'PZ_94': 0.0,

'E_95': 0.0,

'PX_95': 0.0,

'PY_95': 0.0,

'PZ_95': 0.0,

'E_96': 0.0,

'PX_96': 0.0,

'PY_96': 0.0,

'PZ_96': 0.0,

'E_97': 0.0,

'PX_97': 0.0,

'PY_97': 0.0,

'PZ_97': 0.0,

'E_98': 0.0,

'PX_98': 0.0,

'PY_98': 0.0,

'PZ_98': 0.0,

'E_99': 0.0,

'PX_99': 0.0,

'PY_99': 0.0,

'PZ_99': 0.0,

'E_100': 0.0,

'PX_100': 0.0,

'PY_100': 0.0,

'PZ_100': 0.0,

'E_101': 0.0,

'PX_101': 0.0,

'PY_101': 0.0,

'PZ_101': 0.0,

'E_102': 0.0,

'PX_102': 0.0,

'PY_102': 0.0,

'PZ_102': 0.0,

'E_103': 0.0,

'PX_103': 0.0,

'PY_103': 0.0,

'PZ_103': 0.0,

'E_104': 0.0,

'PX_104': 0.0,

'PY_104': 0.0,

'PZ_104': 0.0,

'E_105': 0.0,

'PX_105': 0.0,

'PY_105': 0.0,

'PZ_105': 0.0,

'E_106': 0.0,

'PX_106': 0.0,

'PY_106': 0.0,

'PZ_106': 0.0,

'E_107': 0.0,

'PX_107': 0.0,

'PY_107': 0.0,

'PZ_107': 0.0,

'E_108': 0.0,

'PX_108': 0.0,

'PY_108': 0.0,

'PZ_108': 0.0,

'E_109': 0.0,

'PX_109': 0.0,

'PY_109': 0.0,

'PZ_109': 0.0,

'E_110': 0.0,

'PX_110': 0.0,

'PY_110': 0.0,

'PZ_110': 0.0,

'E_111': 0.0,

'PX_111': 0.0,

'PY_111': 0.0,

'PZ_111': 0.0,

'E_112': 0.0,

'PX_112': 0.0,

'PY_112': 0.0,

'PZ_112': 0.0,

'E_113': 0.0,

'PX_113': 0.0,

'PY_113': 0.0,

'PZ_113': 0.0,

'E_114': 0.0,

'PX_114': 0.0,

'PY_114': 0.0,

'PZ_114': 0.0,

'E_115': 0.0,

'PX_115': 0.0,

'PY_115': 0.0,

'PZ_115': 0.0,

'E_116': 0.0,

'PX_116': 0.0,

'PY_116': 0.0,

'PZ_116': 0.0,

'E_117': 0.0,

'PX_117': 0.0,

'PY_117': 0.0,

'PZ_117': 0.0,

'E_118': 0.0,

'PX_118': 0.0,

'PY_118': 0.0,

'PZ_118': 0.0,

'E_119': 0.0,

'PX_119': 0.0,

'PY_119': 0.0,

'PZ_119': 0.0,

'E_120': 0.0,

'PX_120': 0.0,

'PY_120': 0.0,

'PZ_120': 0.0,

'E_121': 0.0,

'PX_121': 0.0,

'PY_121': 0.0,

'PZ_121': 0.0,

'E_122': 0.0,

'PX_122': 0.0,

'PY_122': 0.0,

'PZ_122': 0.0,

'E_123': 0.0,

'PX_123': 0.0,

'PY_123': 0.0,

'PZ_123': 0.0,

'E_124': 0.0,

'PX_124': 0.0,

'PY_124': 0.0,

'PZ_124': 0.0,

'E_125': 0.0,

'PX_125': 0.0,

'PY_125': 0.0,

'PZ_125': 0.0,

'E_126': 0.0,

'PX_126': 0.0,

'PY_126': 0.0,

'PZ_126': 0.0,

'E_127': 0.0,

'PX_127': 0.0,

'PY_127': 0.0,

'PZ_127': 0.0,

'E_128': 0.0,

'PX_128': 0.0,

'PY_128': 0.0,

'PZ_128': 0.0,

'E_129': 0.0,

'PX_129': 0.0,

'PY_129': 0.0,

'PZ_129': 0.0,

'E_130': 0.0,

'PX_130': 0.0,

'PY_130': 0.0,

'PZ_130': 0.0,

'E_131': 0.0,

'PX_131': 0.0,

'PY_131': 0.0,

'PZ_131': 0.0,

'E_132': 0.0,

'PX_132': 0.0,

'PY_132': 0.0,

'PZ_132': 0.0,

'E_133': 0.0,

'PX_133': 0.0,

'PY_133': 0.0,

'PZ_133': 0.0,

'E_134': 0.0,

'PX_134': 0.0,

'PY_134': 0.0,

'PZ_134': 0.0,

'E_135': 0.0,

'PX_135': 0.0,

'PY_135': 0.0,

'PZ_135': 0.0,

'E_136': 0.0,

'PX_136': 0.0,

'PY_136': 0.0,

'PZ_136': 0.0,

'E_137': 0.0,

'PX_137': 0.0,

'PY_137': 0.0,

'PZ_137': 0.0,

'E_138': 0.0,

'PX_138': 0.0,

'PY_138': 0.0,

'PZ_138': 0.0,

'E_139': 0.0,

'PX_139': 0.0,

'PY_139': 0.0,

'PZ_139': 0.0,

'E_140': 0.0,

'PX_140': 0.0,

'PY_140': 0.0,

'PZ_140': 0.0,

'E_141': 0.0,

'PX_141': 0.0,

'PY_141': 0.0,

'PZ_141': 0.0,

'E_142': 0.0,

'PX_142': 0.0,

'PY_142': 0.0,

'PZ_142': 0.0,

'E_143': 0.0,

'PX_143': 0.0,

'PY_143': 0.0,

'PZ_143': 0.0,

'E_144': 0.0,

'PX_144': 0.0,

'PY_144': 0.0,

'PZ_144': 0.0,

'E_145': 0.0,

'PX_145': 0.0,

'PY_145': 0.0,

'PZ_145': 0.0,

'E_146': 0.0,

'PX_146': 0.0,

'PY_146': 0.0,

'PZ_146': 0.0,

'E_147': 0.0,

'PX_147': 0.0,

'PY_147': 0.0,

'PZ_147': 0.0,

'E_148': 0.0,

'PX_148': 0.0,

'PY_148': 0.0,

'PZ_148': 0.0,

'E_149': 0.0,

'PX_149': 0.0,

'PY_149': 0.0,

'PZ_149': 0.0,

'E_150': 0.0,

'PX_150': 0.0,

'PY_150': 0.0,

'PZ_150': 0.0,

'E_151': 0.0,

'PX_151': 0.0,

'PY_151': 0.0,

'PZ_151': 0.0,

'E_152': 0.0,

'PX_152': 0.0,

'PY_152': 0.0,

'PZ_152': 0.0,

'E_153': 0.0,

'PX_153': 0.0,

'PY_153': 0.0,

'PZ_153': 0.0,

'E_154': 0.0,

'PX_154': 0.0,

'PY_154': 0.0,

'PZ_154': 0.0,

'E_155': 0.0,

'PX_155': 0.0,

'PY_155': 0.0,

'PZ_155': 0.0,

'E_156': 0.0,

'PX_156': 0.0,

'PY_156': 0.0,

'PZ_156': 0.0,

'E_157': 0.0,

'PX_157': 0.0,

'PY_157': 0.0,

'PZ_157': 0.0,

'E_158': 0.0,

'PX_158': 0.0,

'PY_158': 0.0,

'PZ_158': 0.0,

'E_159': 0.0,

'PX_159': 0.0,

'PY_159': 0.0,

'PZ_159': 0.0,

'E_160': 0.0,

'PX_160': 0.0,

'PY_160': 0.0,

'PZ_160': 0.0,

'E_161': 0.0,

'PX_161': 0.0,

'PY_161': 0.0,

'PZ_161': 0.0,

'E_162': 0.0,

'PX_162': 0.0,

'PY_162': 0.0,

'PZ_162': 0.0,

'E_163': 0.0,

'PX_163': 0.0,

'PY_163': 0.0,

'PZ_163': 0.0,

'E_164': 0.0,

'PX_164': 0.0,

'PY_164': 0.0,

'PZ_164': 0.0,

'E_165': 0.0,

'PX_165': 0.0,

'PY_165': 0.0,

'PZ_165': 0.0,

'E_166': 0.0,

'PX_166': 0.0,

'PY_166': 0.0,

'PZ_166': 0.0,

'E_167': 0.0,

'PX_167': 0.0,

'PY_167': 0.0,

'PZ_167': 0.0,

'E_168': 0.0,

'PX_168': 0.0,

'PY_168': 0.0,

'PZ_168': 0.0,

'E_169': 0.0,

'PX_169': 0.0,

'PY_169': 0.0,

'PZ_169': 0.0,

'E_170': 0.0,

'PX_170': 0.0,

'PY_170': 0.0,

'PZ_170': 0.0,

'E_171': 0.0,

'PX_171': 0.0,

'PY_171': 0.0,

'PZ_171': 0.0,

'E_172': 0.0,

'PX_172': 0.0,

'PY_172': 0.0,

'PZ_172': 0.0,

'E_173': 0.0,

'PX_173': 0.0,

'PY_173': 0.0,

'PZ_173': 0.0,

'E_174': 0.0,

'PX_174': 0.0,

'PY_174': 0.0,

'PZ_174': 0.0,

'E_175': 0.0,

'PX_175': 0.0,

'PY_175': 0.0,

'PZ_175': 0.0,

'E_176': 0.0,

'PX_176': 0.0,

'PY_176': 0.0,

'PZ_176': 0.0,

'E_177': 0.0,

'PX_177': 0.0,

'PY_177': 0.0,

'PZ_177': 0.0,

'E_178': 0.0,

'PX_178': 0.0,

'PY_178': 0.0,

'PZ_178': 0.0,

'E_179': 0.0,

'PX_179': 0.0,

'PY_179': 0.0,

'PZ_179': 0.0,

'E_180': 0.0,

'PX_180': 0.0,

'PY_180': 0.0,

'PZ_180': 0.0,

'E_181': 0.0,

'PX_181': 0.0,

'PY_181': 0.0,

'PZ_181': 0.0,

'E_182': 0.0,

'PX_182': 0.0,

'PY_182': 0.0,

'PZ_182': 0.0,

'E_183': 0.0,

'PX_183': 0.0,

'PY_183': 0.0,

'PZ_183': 0.0,

'E_184': 0.0,

'PX_184': 0.0,

'PY_184': 0.0,

'PZ_184': 0.0,

'E_185': 0.0,

'PX_185': 0.0,

'PY_185': 0.0,

'PZ_185': 0.0,

'E_186': 0.0,

'PX_186': 0.0,

'PY_186': 0.0,

'PZ_186': 0.0,

'E_187': 0.0,

'PX_187': 0.0,

'PY_187': 0.0,

'PZ_187': 0.0,

'E_188': 0.0,

'PX_188': 0.0,

'PY_188': 0.0,

'PZ_188': 0.0,

'E_189': 0.0,

'PX_189': 0.0,

'PY_189': 0.0,

'PZ_189': 0.0,

'E_190': 0.0,

'PX_190': 0.0,

'PY_190': 0.0,

'PZ_190': 0.0,

'E_191': 0.0,

'PX_191': 0.0,

'PY_191': 0.0,

'PZ_191': 0.0,

'E_192': 0.0,

'PX_192': 0.0,

'PY_192': 0.0,

'PZ_192': 0.0,

'E_193': 0.0,

'PX_193': 0.0,

'PY_193': 0.0,

'PZ_193': 0.0,

'E_194': 0.0,

'PX_194': 0.0,

'PY_194': 0.0,

'PZ_194': 0.0,

'E_195': 0.0,

'PX_195': 0.0,

'PY_195': 0.0,

'PZ_195': 0.0,

'E_196': 0.0,

'PX_196': 0.0,

'PY_196': 0.0,

'PZ_196': 0.0,

'E_197': 0.0,

'PX_197': 0.0,

'PY_197': 0.0,

'PZ_197': 0.0,

'E_198': 0.0,

'PX_198': 0.0,

'PY_198': 0.0,

'PZ_198': 0.0,

'E_199': 0.0,

'PX_199': 0.0,

'PY_199': 0.0,

'PZ_199': 0.0,

'truthE': 0.0,

'truthPX': 0.0,

'truthPY': 0.0,

'truthPZ': 0.0,

'ttv': 0,

'is_signal_new': 0}

Here we see that a single row is represented as a dictionary whose keys are the column names. Since we won’t need the top-quark 4-vector columns, let’s remove them along with the ttv column:

top_tagging_ds = top_tagging_ds.remove_columns(

["truthE", "truthPX", "truthPY", "truthPZ", "ttv"]

)

Although 🤗 Datasets provides a lot of low-level functionality for preprocessing datasets, it is often convenient to convert a Dataset object to a Pandas DataFrame. To enable the conversion, 🤗 Datasets provides a set_format() method that allows us to change the output format of the dataset:

# Convert output format to DataFrames

top_tagging_ds.set_format("pandas")

# Create DataFrames for the training and test splits

train_df, test_df = top_tagging_ds["train"][:], top_tagging_ds["test"][:]

# Peek at first few rows

train_df.head()

| | E_0 | PX_0 | PY_0 | PZ_0 | E_1 | PX_1 | PY_1 | PZ_1 | E_2 | PX_2 | ... | PZ_197 | E_198 | PX_198 | PY_198 | PZ_198 | E_199 | PX_199 | PY_199 | PZ_199 | is_signal_new |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 474.071136 | -250.347031 | -223.651962 | -334.738098 | 103.236237 | -48.866222 | -56.790775 | -71.025490 | 105.255569 | -55.415001 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 |

| 1 | 150.504532 | 120.062393 | 76.852005 | -48.274265 | 82.257057 | 63.801739 | 42.754807 | -29.454842 | 48.573559 | 36.763199 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 |

| 2 | 251.645386 | 10.427651 | -147.573746 | 203.564880 | 104.147797 | 10.718256 | -54.497948 | 88.101395 | 78.043213 | 5.724113 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 |

| 3 | 451.566132 | 129.885437 | -99.066292 | -420.984100 | 208.410919 | 59.033958 | -46.177090 | -194.467941 | 190.183304 | 54.069675 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 |

| 4 | 399.093903 | -168.432083 | -47.205597 | -358.717438 | 273.691956 | -121.926941 | -30.803854 | -243.088928 | 152.837219 | -44.400204 | ... | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0 |

5 rows × 801 columns

As we can see, each row consists of the 4-vectors \((E_i, p_{x_i}, p_{y_i}, p_{z_i})\) of the up to 200 particles that make up each jet. Since most jets have fewer than 200 particles, the remaining entries are padded with zeros. We also have an is_signal_new column that indicates whether the jet is a top-quark signal (1) or QCD background (0).
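To make the padding and the 4-vector layout concrete, here is a small sketch that counts the real (non-padded) particles in each jet and sums the 4-vectors to get the jet invariant mass, \(m^2 = E^2 - p_x^2 - p_y^2 - p_z^2\) in natural units. It assumes the E_i, PX_i, PY_i, PZ_i column naming shown above; the helper names and the toy three-particle jet are hypothetical.

```python
import numpy as np
import pandas as pd


def constituent_columns(prefix, n):
    """Column names such as E_0, E_1, ... for the first n particles."""
    return [f"{prefix}_{i}" for i in range(n)]


def count_constituents(df, n):
    """Number of non-padded particles per jet (padded slots have E == 0)."""
    energies = df[constituent_columns("E", n)].to_numpy()
    return (energies > 0).sum(axis=1)


def jet_mass(df, n):
    """Invariant mass of the summed constituent 4-vectors, one value per jet."""
    e, px, py, pz = (
        df[constituent_columns(p, n)].to_numpy().sum(axis=1)
        for p in ("E", "PX", "PY", "PZ")
    )
    m2 = e**2 - px**2 - py**2 - pz**2
    # Clip tiny negative values caused by floating-point rounding
    return np.sqrt(np.clip(m2, 0.0, None))


# Toy jet: two massless particles plus one zero-padded slot (invented values)
toy_df = pd.DataFrame(
    {
        "E_0": [5.0], "PX_0": [3.0], "PY_0": [0.0], "PZ_0": [4.0],
        "E_1": [5.0], "PX_1": [-3.0], "PY_1": [0.0], "PZ_1": [4.0],
        "E_2": [0.0], "PX_2": [0.0], "PY_2": [0.0], "PZ_2": [0.0],
        "is_signal_new": [0],
    }
)

n_particles = count_constituents(toy_df, n=3)
masses = jet_mass(toy_df, n=3)
print(n_particles, masses)
```

On the real DataFrame the same helpers would be called with n=200, for example count_constituents(train_df, n=200).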

Now that we’ve had a look at a sample of the raw data, let’s take a look at how we can convert it to a format that is suitable for neural networks!

Introducing fastai#

To train our model, we’ll use the fastai library. fastai is a popular high-level framework for training deep neural networks with PyTorch and provides various application-specific classes for different types of deep learning data structures and architectures. It is also designed with a layered API, which means:

We can use high-level components to quickly and easily get state-of-the-art results in standard deep learning domains

Low-level components can be mixed and matched to build new approaches

In particular, this approach will allow us in later lessons to use pure PyTorch code to define our models, and then let fastai take care of the training loop (which is often an error-prone process).
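To give a feel for what a training loop involves, here is a minimal sketch written in plain NumPy rather than PyTorch or fastai. It trains a logistic regression by mini-batch gradient descent on synthetic data; the data, variable names, and hyperparameters are all illustrative assumptions and have nothing to do with the jet dataset.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic, linearly separable toy data (not jet data)
X = rng.normal(size=(200, 2))
y = (X[:, 0] + X[:, 1] > 0).astype(float)


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def bce_loss(w, b):
    """Mean binary cross-entropy over the full toy dataset."""
    p = np.clip(sigmoid(X @ w + b), 1e-12, 1 - 1e-12)
    return float(-np.mean(y * np.log(p) + (1 - y) * np.log(1 - p)))


w, b = np.zeros(2), 0.0
lr, batch_size, n_epochs = 0.5, 32, 20
losses = [bce_loss(w, b)]  # loss before any training

for epoch in range(n_epochs):
    order = rng.permutation(len(X))       # reshuffle the data each epoch
    for start in range(0, len(X), batch_size):
        idx = order[start:start + batch_size]
        xb, yb = X[idx], y[idx]
        preds = sigmoid(xb @ w + b)       # forward pass
        grad_z = (preds - yb) / len(idx)  # gradient of the mean loss w.r.t. the logits
        w -= lr * (xb.T @ grad_z)         # update weights
        b -= lr * grad_z.sum()            # update bias
    losses.append(bce_loss(w, b))         # track the loss after each epoch

accuracy = float(np.mean((sigmoid(X @ w + b) > 0.5) == (y == 1)))
print(losses[0], losses[-1], accuracy)
```

Even in this tiny example there are several places to slip up (shuffling, batching, gradient scaling, update order), which is the bookkeeping a framework handles for us.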

Basics of the API

The most common steps one takes when training a model in fastai are:

Create DataLoaders to feed batches of data to the model

Create a Learner which wraps the architecture, optimizer, and data, and automatically chooses an appropriate loss function where possible

Find a good learning rate

Train your model

Evaluate your model

Let’s go through each of these steps to build a neural network that can classify top quark jets from the QCD background!

From data to DataLoaders#

To wrangle our data into a format that’s suitable for training neural nets, we need to create an object called DataLoaders. To turn our dataset into a DataLoaders object we need to specify:

What type of data we are dealing with (tabular, images, etc)

How to get the examples

How to label each example

How to create the validation set

fastai provides a number of classes for different kinds of deep learning datasets and problems. In our case, the data is in a tabular format (i.e. a table of rows and columns), so we can use the TabularDataLoaders class:

# Downsample to ~0.5 if you're running on Colab / Kaggle which have limited RAM

frac_of_samples = 1.0

train_df = train_df.sample(int(frac_of_samples * len(train_df)), random_state=42)

features = list(train_df.drop(columns=["is_signal_new"]).columns)

splits = RandomSplitter(valid_pct=0.20, seed=42)(range_of(train_df))

dls = TabularDataLoaders.from_df(

df=train_df,

cont_names=features,

y_names="is_signal_new",

y_block=CategoryBlock,

splits=splits,

bs=1024,

)

Let’s unpack this code a bit. First, we’ve specified which columns of our dataset are continuous features via the cont_names argument; to do this, we’ve simply taken every column name from our DataFrame except the label column is_signal_new. Next, we’ve specified the target column in y_names and declared it categorical with y_block=CategoryBlock. Finally, we’ve specified the training and validation splits with RandomSplitter and picked a batch size of 1,024 examples.
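To get a sense of the sizes involved, the sketch below mimics the idea of a seeded random train/validation split in NumPy and works out how many batches of 1,024 fit into the training portion. It illustrates the concept only and is not fastai's implementation: the random_split helper is hypothetical, and the indices it produces will differ from those of RandomSplitter.

```python
import numpy as np


def random_split(n_items, valid_pct=0.2, seed=42):
    """Shuffle the indices 0..n_items-1 and hold out a fraction for validation."""
    rng = np.random.default_rng(seed)
    shuffled = rng.permutation(n_items)
    cut = int(valid_pct * n_items)
    return shuffled[cut:], shuffled[:cut]  # (train indices, validation indices)


# 1,211,000 is the number of rows in the training split shown earlier
train_idx, valid_idx = random_split(1_211_000, valid_pct=0.20, seed=42)

# How many full batches of 1,024 examples fit in the training portion
n_full_batches, remainder = divmod(len(train_idx), 1024)

print(len(train_idx), len(valid_idx))
print(n_full_batches, remainder)
```

With the full training split this gives 968,800 training and 242,200 validation examples, i.e. 946 full batches per epoch plus 96 leftover examples.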

After we’ve defined a DataLoaders object, we can take a look at the data by using the show_batch() method:

dls.show_batch()

| E_0 | PX_0 | PY_0 | PZ_0 | E_1 | PX_1 | PY_1 | PZ_1 | E_2 | PX_2 | PY_2 | PZ_2 | E_3 | PX_3 | PY_3 | PZ_3 | E_4 | PX_4 | PY_4 | PZ_4 | E_5 | PX_5 | PY_5 | PZ_5 | E_6 | PX_6 | PY_6 | PZ_6 | E_7 | PX_7 | PY_7 | PZ_7 | E_8 | PX_8 | PY_8 | PZ_8 | E_9 | PX_9 | PY_9 | PZ_9 | E_10 | PX_10 | PY_10 | PZ_10 | E_11 | PX_11 | PY_11 | PZ_11 | E_12 | PX_12 | PY_12 | PZ_12 | E_13 | PX_13 | PY_13 | PZ_13 | E_14 | PX_14 | PY_14 | PZ_14 | E_15 | PX_15 | PY_15 | PZ_15 | E_16 | PX_16 | PY_16 | PZ_16 | E_17 | PX_17 | PY_17 | PZ_17 | E_18 | PX_18 | PY_18 | PZ_18 | E_19 | PX_19 | PY_19 | PZ_19 | E_20 | PX_20 | PY_20 | PZ_20 | E_21 | PX_21 | PY_21 | PZ_21 | E_22 | PX_22 | PY_22 | PZ_22 | E_23 | PX_23 | PY_23 | PZ_23 | E_24 | PX_24 | PY_24 | PZ_24 | E_25 | PX_25 | PY_25 | PZ_25 | E_26 | PX_26 | PY_26 | PZ_26 | E_27 | PX_27 | PY_27 | PZ_27 | E_28 | PX_28 | PY_28 | PZ_28 | E_29 | PX_29 | PY_29 | PZ_29 | E_30 | PX_30 | PY_30 | PZ_30 | E_31 | PX_31 | PY_31 | PZ_31 | E_32 | PX_32 | PY_32 | PZ_32 | E_33 | PX_33 | PY_33 | PZ_33 | E_34 | PX_34 | PY_34 | PZ_34 | E_35 | PX_35 | PY_35 | PZ_35 | E_36 | PX_36 | PY_36 | PZ_36 | E_37 | PX_37 | PY_37 | PZ_37 | E_38 | PX_38 | PY_38 | PZ_38 | E_39 | PX_39 | PY_39 | PZ_39 | E_40 | PX_40 | PY_40 | PZ_40 | E_41 | PX_41 | PY_41 | PZ_41 | E_42 | PX_42 | PY_42 | PZ_42 | E_43 | PX_43 | PY_43 | PZ_43 | E_44 | PX_44 | PY_44 | PZ_44 | E_45 | PX_45 | PY_45 | PZ_45 | E_46 | PX_46 | PY_46 | PZ_46 | E_47 | PX_47 | PY_47 | PZ_47 | E_48 | PX_48 | PY_48 | PZ_48 | E_49 | PX_49 | PY_49 | PZ_49 | E_50 | PX_50 | PY_50 | PZ_50 | E_51 | PX_51 | PY_51 | PZ_51 | E_52 | PX_52 | PY_52 | PZ_52 | E_53 | PX_53 | PY_53 | PZ_53 | E_54 | PX_54 | PY_54 | PZ_54 | E_55 | PX_55 | PY_55 | PZ_55 | E_56 | PX_56 | PY_56 | PZ_56 | E_57 | PX_57 | PY_57 | PZ_57 | E_58 | PX_58 | PY_58 | PZ_58 | E_59 | PX_59 | PY_59 | PZ_59 | E_60 | PX_60 | PY_60 | PZ_60 | E_61 | PX_61 | PY_61 | PZ_61 | E_62 | PX_62 | PY_62 | PZ_62 | E_63 | PX_63 | PY_63 | PZ_63 | E_64 | PX_64 | PY_64 | PZ_64 | E_65 | PX_65 | PY_65 | 
PZ_65 | E_66 | PX_66 | PY_66 | PZ_66 | E_67 | PX_67 | PY_67 | PZ_67 | E_68 | PX_68 | PY_68 | PZ_68 | E_69 | PX_69 | PY_69 | PZ_69 | E_70 | PX_70 | PY_70 | PZ_70 | E_71 | PX_71 | PY_71 | PZ_71 | E_72 | PX_72 | PY_72 | PZ_72 | E_73 | PX_73 | PY_73 | PZ_73 | E_74 | PX_74 | PY_74 | PZ_74 | E_75 | PX_75 | PY_75 | PZ_75 | E_76 | PX_76 | PY_76 | PZ_76 | E_77 | PX_77 | PY_77 | PZ_77 | E_78 | PX_78 | PY_78 | PZ_78 | E_79 | PX_79 | PY_79 | PZ_79 | E_80 | PX_80 | PY_80 | PZ_80 | E_81 | PX_81 | PY_81 | PZ_81 | E_82 | PX_82 | PY_82 | PZ_82 | E_83 | PX_83 | PY_83 | PZ_83 | E_84 | PX_84 | PY_84 | PZ_84 | E_85 | PX_85 | PY_85 | PZ_85 | E_86 | PX_86 | PY_86 | PZ_86 | E_87 | PX_87 | PY_87 | PZ_87 | E_88 | PX_88 | PY_88 | PZ_88 | E_89 | PX_89 | PY_89 | PZ_89 | E_90 | PX_90 | PY_90 | PZ_90 | E_91 | PX_91 | PY_91 | PZ_91 | E_92 | PX_92 | PY_92 | PZ_92 | E_93 | PX_93 | PY_93 | PZ_93 | E_94 | PX_94 | PY_94 | PZ_94 | E_95 | PX_95 | PY_95 | PZ_95 | E_96 | PX_96 | PY_96 | PZ_96 | E_97 | PX_97 | PY_97 | PZ_97 | E_98 | PX_98 | PY_98 | PZ_98 | E_99 | PX_99 | PY_99 | PZ_99 | E_100 | PX_100 | PY_100 | PZ_100 | E_101 | PX_101 | PY_101 | PZ_101 | E_102 | PX_102 | PY_102 | PZ_102 | E_103 | PX_103 | PY_103 | PZ_103 | E_104 | PX_104 | PY_104 | PZ_104 | E_105 | PX_105 | PY_105 | PZ_105 | E_106 | PX_106 | PY_106 | PZ_106 | E_107 | PX_107 | PY_107 | PZ_107 | E_108 | PX_108 | PY_108 | PZ_108 | E_109 | PX_109 | PY_109 | PZ_109 | E_110 | PX_110 | PY_110 | PZ_110 | E_111 | PX_111 | PY_111 | PZ_111 | E_112 | PX_112 | PY_112 | PZ_112 | E_113 | PX_113 | PY_113 | PZ_113 | E_114 | PX_114 | PY_114 | PZ_114 | E_115 | PX_115 | PY_115 | PZ_115 | E_116 | PX_116 | PY_116 | PZ_116 | E_117 | PX_117 | PY_117 | PZ_117 | E_118 | PX_118 | PY_118 | PZ_118 | E_119 | PX_119 | PY_119 | PZ_119 | E_120 | PX_120 | PY_120 | PZ_120 | E_121 | PX_121 | PY_121 | PZ_121 | E_122 | PX_122 | PY_122 | PZ_122 | E_123 | PX_123 | PY_123 | PZ_123 | E_124 | PX_124 | PY_124 | PZ_124 | E_125 | PX_125 | PY_125 | PZ_125 | E_126 | PX_126 | PY_126 | 
PZ_126 | E_127 | PX_127 | PY_127 | PZ_127 | E_128 | PX_128 | PY_128 | PZ_128 | E_129 | PX_129 | PY_129 | PZ_129 | E_130 | PX_130 | PY_130 | PZ_130 | E_131 | PX_131 | PY_131 | PZ_131 | E_132 | PX_132 | PY_132 | PZ_132 | E_133 | PX_133 | PY_133 | PZ_133 | E_134 | PX_134 | PY_134 | PZ_134 | E_135 | PX_135 | PY_135 | PZ_135 | E_136 | PX_136 | PY_136 | PZ_136 | E_137 | PX_137 | PY_137 | PZ_137 | E_138 | PX_138 | PY_138 | PZ_138 | E_139 | PX_139 | PY_139 | PZ_139 | E_140 | PX_140 | PY_140 | PZ_140 | E_141 | PX_141 | PY_141 | PZ_141 | E_142 | PX_142 | PY_142 | PZ_142 | E_143 | PX_143 | PY_143 | PZ_143 | E_144 | PX_144 | PY_144 | PZ_144 | E_145 | PX_145 | PY_145 | PZ_145 | E_146 | PX_146 | PY_146 | PZ_146 | E_147 | PX_147 | PY_147 | PZ_147 | E_148 | PX_148 | PY_148 | PZ_148 | E_149 | PX_149 | PY_149 | PZ_149 | E_150 | PX_150 | PY_150 | PZ_150 | E_151 | PX_151 | PY_151 | PZ_151 | E_152 | PX_152 | PY_152 | PZ_152 | E_153 | PX_153 | PY_153 | PZ_153 | E_154 | PX_154 | PY_154 | PZ_154 | E_155 | PX_155 | PY_155 | PZ_155 | E_156 | PX_156 | PY_156 | PZ_156 | E_157 | PX_157 | PY_157 | PZ_157 | E_158 | PX_158 | PY_158 | PZ_158 | E_159 | PX_159 | PY_159 | PZ_159 | E_160 | PX_160 | PY_160 | PZ_160 | E_161 | PX_161 | PY_161 | PZ_161 | E_162 | PX_162 | PY_162 | PZ_162 | E_163 | PX_163 | PY_163 | PZ_163 | E_164 | PX_164 | PY_164 | PZ_164 | E_165 | PX_165 | PY_165 | PZ_165 | E_166 | PX_166 | PY_166 | PZ_166 | E_167 | PX_167 | PY_167 | PZ_167 | E_168 | PX_168 | PY_168 | PZ_168 | E_169 | PX_169 | PY_169 | PZ_169 | E_170 | PX_170 | PY_170 | PZ_170 | E_171 | PX_171 | PY_171 | PZ_171 | E_172 | PX_172 | PY_172 | PZ_172 | E_173 | PX_173 | PY_173 | PZ_173 | E_174 | PX_174 | PY_174 | PZ_174 | E_175 | PX_175 | PY_175 | PZ_175 | E_176 | PX_176 | PY_176 | PZ_176 | E_177 | PX_177 | PY_177 | PZ_177 | E_178 | PX_178 | PY_178 | PZ_178 | E_179 | PX_179 | PY_179 | PZ_179 | E_180 | PX_180 | PY_180 | PZ_180 | E_181 | PX_181 | PY_181 | PZ_181 | E_182 | PX_182 | PY_182 | PZ_182 | E_183 | PX_183 | PY_183 | 
PZ_183 | E_184 | PX_184 | PY_184 | PZ_184 | E_185 | PX_185 | PY_185 | PZ_185 | E_186 | PX_186 | PY_186 | PZ_186 | E_187 | PX_187 | PY_187 | PZ_187 | E_188 | PX_188 | PY_188 | PZ_188 | E_189 | PX_189 | PY_189 | PZ_189 | E_190 | PX_190 | PY_190 | PZ_190 | E_191 | PX_191 | PY_191 | PZ_191 | E_192 | PX_192 | PY_192 | PZ_192 | E_193 | PX_193 | PY_193 | PZ_193 | E_194 | PX_194 | PY_194 | PZ_194 | E_195 | PX_195 | PY_195 | PZ_195 | E_196 | PX_196 | PY_196 | PZ_196 | E_197 | PX_197 | PY_197 | PZ_197 | E_198 | PX_198 | PY_198 | PZ_198 | E_199 | PX_199 | PY_199 | PZ_199 | is_signal_new | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 156.651123 | 147.405258 | 26.006823 | -46.205074 | 66.166000 | 61.714252 | 13.618260 | -19.591679 | 59.187538 | 43.524006 | 18.544239 | -35.565948 | 52.280418 | 39.901722 | 14.766282 | -30.381767 | 46.479649 | 37.455952 | -4.363357 | -27.172979 | 37.165035 | 35.369465 | 1.354363 | -11.331660 | 36.232121 | 27.362452 | 10.842762 | -21.130484 | 35.059006 | 26.817917 | 10.583280 | -19.948118 | 27.499258 | 21.504807 | -7.419861 | -15.449861 | 24.723345 | 18.445026 | 7.439287 | -14.686109 | 21.618416 | 17.497467 | 5.555872 | -11.416079 | 20.788595 | 15.854955 | 6.082806 | -11.991064 | 18.213100 | 13.441398 | 6.698515 | -10.304162 | 12.677663 | 12.036289 | 2.944411 | -2.679800 | 12.660934 | 11.887339 | 1.900199 | -3.921694 | 9.484063 | 7.706819 | 2.073808 | -5.123643 | 8.043362 | 6.180357 | 2.354782 | -4.577538 | 7.829610 | 5.816335 | 2.422960 | -4.647829 | 7.204622 | 5.786285 | -0.912594 | -4.194359 | 6.569118 | 4.983078 | -0.247118 | -4.273309 | 5.204410 | 4.723341 | 0.999273 | -1.943549 | 5.212553 | 4.281167 | 1.108515 | -2.759259 | 5.343522 | 4.059355 | 1.723231 | -3.017504 | 3.995430 | 2.993573 | 1.290984 | -2.309836 | 3.862169 | 3.138426 | 0.844511 | -2.086487 | 3.488208 | 3.158298 | 0.699826 | -1.304989 | 2.978119 | 2.682876 | -0.341816 | -1.246809 | 3.168274 | 2.370644 | -0.752072 | -1.962752 | 2.761582 | 2.272017 | 0.939171 | -1.257868 | 3.092704 | 2.281398 | -0.749264 | -1.949011 | 3.142989 | 2.093421 | 1.106632 | -2.066720 | 2.061056 | 1.755722 | 0.585744 | -0.906805 | 2.281940 | 1.579042 | -0.750299 | -1.466605 | 1.614905 | 1.292173 | -0.374225 | -0.893399 | 1.415592 | 1.206074 | 0.533570 | -0.514383 | 1.675033 | 1.266876 | 0.103127 | -1.090928 | 1.120713 | 1.010384 | 0.072985 | -0.479369 | 0.886037 | 0.871607 | 0.097665 | -0.125795 | 0.932767 | 0.800974 | 0.097008 | -0.468065 | 1.065342 | 0.602590 | 0.389746 | -0.787360 | 0.728177 | 0.659168 | 0.136783 | -0.277542 | 0.746282 | 0.631983 | 0.138891 | -0.371811 | 0.668374 | 0.626190 | -0.143476 | -0.184459 
| 0.616981 | 0.575796 | 0.220805 | -0.019220 | 0.595697 | 0.543712 | -0.019745 | -0.242575 | 0.647482 | 0.387422 | 0.197245 | -0.479825 | 0.660883 | 0.346850 | 0.072733 | -0.557828 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.0000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.0 | 0.0 | 0.0 | 0.0 | 
0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 
0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1 |

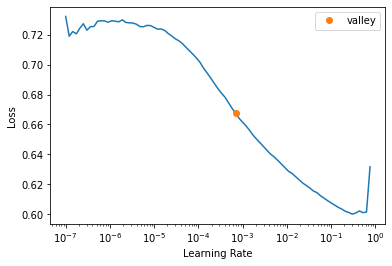

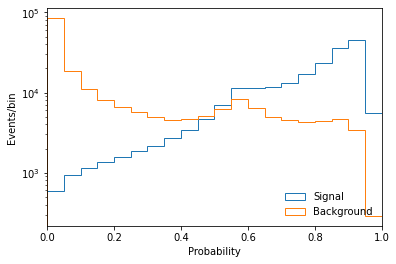

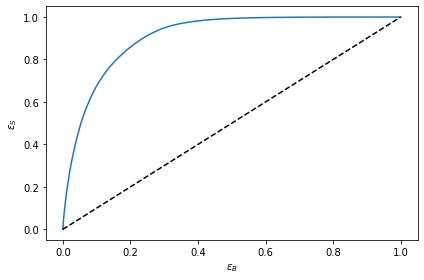

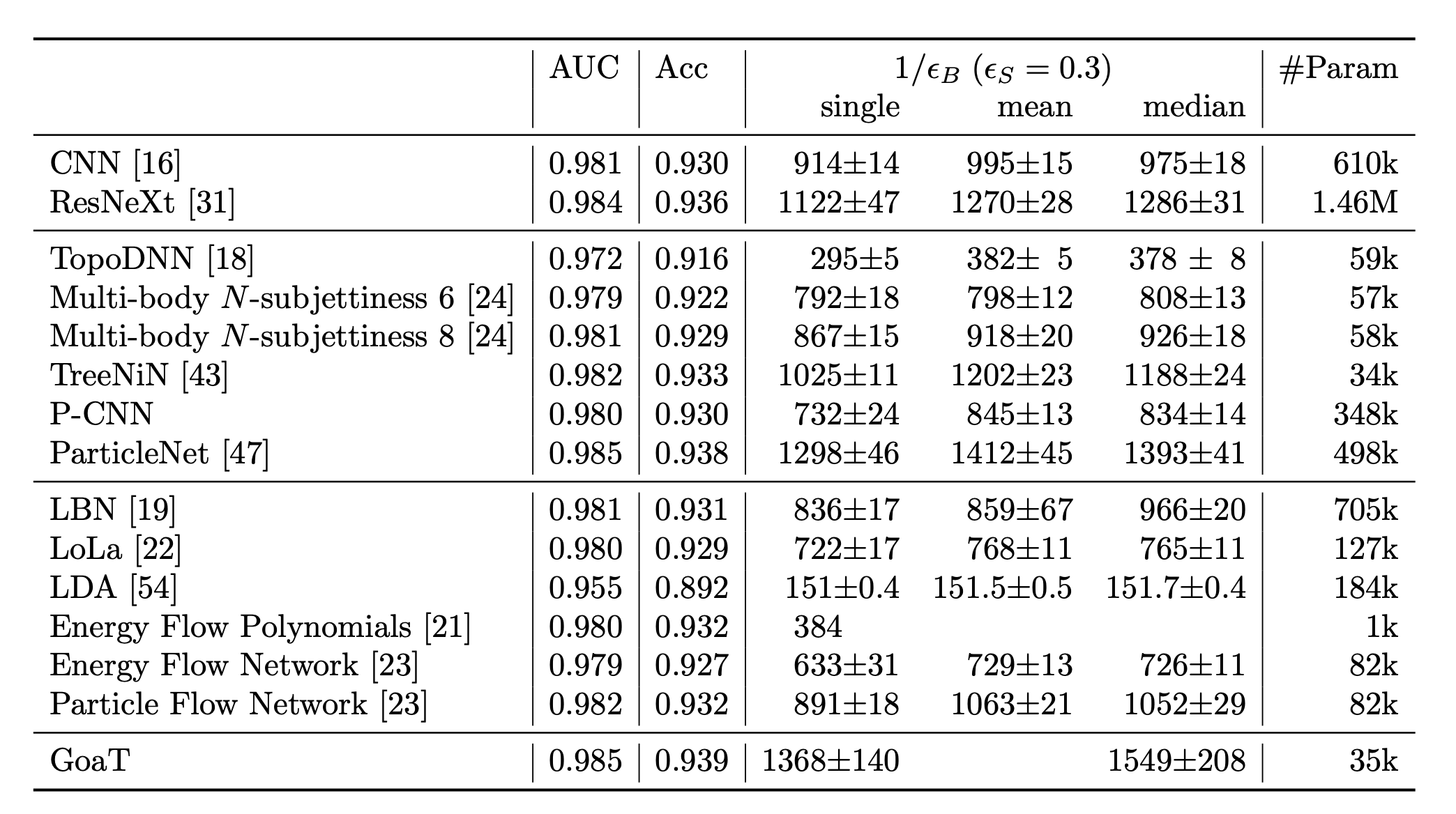

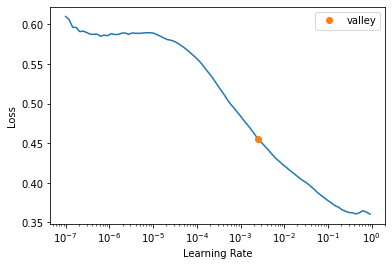

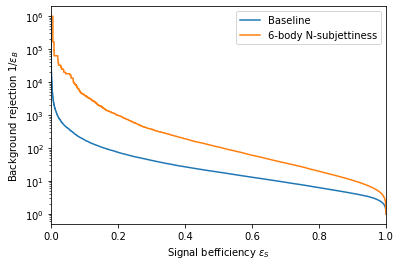

| 1 | 217.770920 | 112.669815 | -55.818962 | -177.803070 | 169.487793 | 57.047081 | -86.189850 | -134.324432 | 79.572060 | 52.154568 | -31.429808 | -51.222851 | 56.272415 | 34.468712 | -25.402637 | -36.512993 | 47.898685 | 33.165764 | -16.905451 | -30.141701 | 38.172230 | 26.431019 | -13.472577 | -24.021032 | 43.246582 | 14.914506 | -23.153084 | -33.343048 | 42.543930 | 22.392347 | -10.330865 | -34.667595 | 32.264137 | 20.407352 | -13.483160 | -21.040884 | 28.707653 | 18.947687 | -11.550921 | -18.212378 | 17.897223 | 10.066465 | -7.947463 | -12.482575 | 15.136470 | 9.821433 | -5.684728 | -10.016788 | 12.714173 | 7.650305 | -4.211721 | -9.240370 | 10.947527 | 6.395720 | -3.739368 | -8.059792 | 9.969221 | 6.233003 | -3.332090 | -7.030806 | 9.037607 | 5.644869 | -2.770133 | -6.491545 | 9.774871 | 3.194082 | -5.116557 | -7.691995 | 7.610077 | 4.280361 | -3.379340 | -5.307716 | 7.548386 | 4.600593 | -2.243847 | -5.547777 | 7.722602 | 4.280883 | -2.738911 | -5.814722 | 7.851254 | 2.219324 | -3.738460 | -6.537639 | 7.487554 | 3.762560 | -2.020533 | -6.150126 | 3.724781 | 2.421021 | -1.775541 | -2.204564 | 3.201555 | 2.680049 | -1.256285 | -1.220262 | 3.649110 | 2.674477 | -1.156612 | -2.196685 | 3.758146 | 2.474789 | -1.060927 | -2.621739 | 3.019944 | 1.376533 | -1.026755 | -2.484149 | 2.923555 | 1.332597 | -0.993983 | -2.404861 | 2.215418 | 1.350253 | -0.658559 | -1.628248 | 1.959735 | 0.949403 | -0.752075 | -1.540642 | 0.888710 | 0.648152 | -0.440543 | -0.419079 | 0.848160 | 0.685332 | -0.233436 | -0.441818 | 0.675687 | 0.371343 | -0.327507 | -0.459779 | 0.911277 | 0.391090 | -0.264506 | -0.779430 | 0.496820 | 0.104868 | -0.304911 | -0.377972 | 0.500294 | 0.158566 | -0.132538 | -0.455614 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 
0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.0000 | 0.000000 | 0.000000 | 0.000000 | 0.00000 | 0.00000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.0 | 
0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 
0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 | 1 |