Deep Learning for Particle Physicists


Welcome to the graduate course on deep learning at the University of Bern’s Albert Einstein Center for Fundamental Physics!

Why all the fuss?#

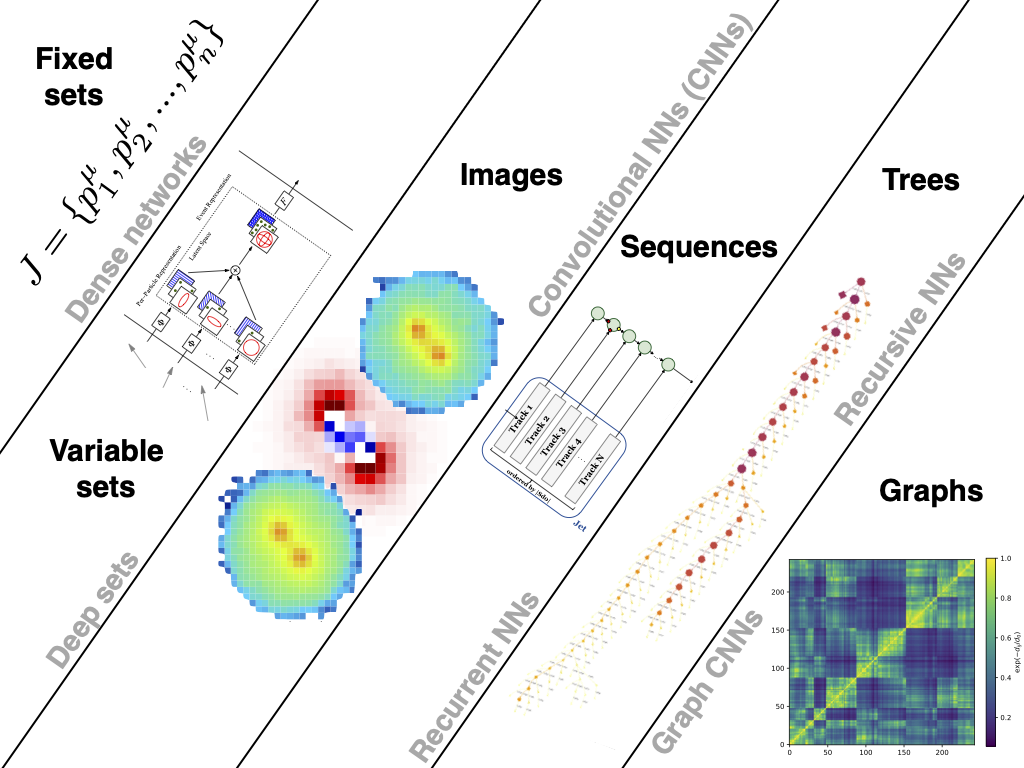

Fig. 1 Figure reference: Jet Substructure at the Large Hadron Collider

Deep learning is a subfield of artificial intelligence that focuses on using neural networks to parse data, learn from it, and then make predictions about something in the world. In the last decade, this framework has led to significant advances in computer vision, natural language processing, and reinforcement learning. More recently, deep learning has begun to attract interest in the physical sciences and is rapidly becoming an important part of the physicist’s toolkit, especially in data-rich fields like high-energy particle physics and cosmology.

This course provides students with a hands-on introduction to the methods of deep learning, with an emphasis on applying these methods to solve particle physics problems. A tentative list of the topics covered in this course includes:

Jet tagging with convolutional neural networks

Transformer neural networks for sequential data

Normalizing flows for physics simulations such as lattice QFT

Throughout the course, students will learn how to implement neural networks from scratch, as well as core algorithms such as backpropagation and stochastic gradient descent. If time permits, we’ll explore how symmetries can be encoded in graph neural networks, along with symbolic regression techniques to extract equations from data.
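To give a flavour of what "from scratch" means here, the following is a minimal sketch of stochastic gradient descent fitting a straight line in plain Python. It is not taken from the course notebooks: the function name `sgd_fit_line`, the toy data, and the hyperparameters are all illustrative assumptions, and the hand-written gradients stand in for what backpropagation automates in a real network.

```python
import random


def sgd_fit_line(xs, ys, lr=0.05, epochs=500, seed=0):
    """Fit y ≈ w * x + b with per-sample SGD on the loss 0.5 * (pred - y)**2.

    Hypothetical helper for illustration only.
    """
    rng = random.Random(seed)
    w, b = 0.0, 0.0
    indices = list(range(len(xs)))
    for _ in range(epochs):
        rng.shuffle(indices)  # visit the samples in a new random order each epoch
        for i in indices:
            pred = w * xs[i] + b   # forward pass
            err = pred - ys[i]     # derivative of the loss w.r.t. the prediction
            # Backward pass: the chain rule gives one gradient per parameter.
            grad_w = err * xs[i]
            grad_b = err
            # Gradient step: move each parameter against its gradient.
            w -= lr * grad_w
            b -= lr * grad_b
    return w, b


# Noise-free toy data drawn from y = 2x + 1 (an assumption for this sketch).
xs = [0.0, 0.5, 1.0, 1.5, 2.0]
ys = [2.0 * x + 1.0 for x in xs]

w, b = sgd_fit_line(xs, ys)
print(f"w = {w:.3f}, b = {b:.3f}")
```

A neural network replaces the line `w * x + b` with a stack of layers, and libraries like PyTorch compute the gradients automatically, but the update loop keeps the same shape.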

Prerequisites#

Although no prior knowledge of deep learning is required, we do recommend having some familiarity with the core concepts of machine learning. This course is hands-on, which means you can expect to be running a lot of code in fastai and PyTorch. You don’t need to know either of these frameworks, but we assume that you’re comfortable programming in Python and using data analysis libraries such as NumPy. A useful precursor to the material covered in this course is Practical Machine Learning for Physicists.

Getting started#

You can run the Jupyter notebooks from this course on cloud platforms like Google Colab or on your local machine. Note that each notebook requires a GPU to run in a reasonable amount of time, so we recommend one of the cloud platforms, which come with CUDA pre-installed.
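Before starting a notebook, it can help to confirm that a GPU is actually visible. The snippet below is a small sketch of such a check, assuming PyTorch is the framework in use; the helper name `describe_device` is hypothetical, and the check falls back gracefully if PyTorch is not installed.

```python
import importlib.util


def describe_device():
    """Return 'cuda', 'cpu', or 'torch-not-installed' for this environment.

    Hypothetical helper for illustration only.
    """
    # Avoid an ImportError on machines where PyTorch is not set up yet.
    if importlib.util.find_spec("torch") is None:
        return "torch-not-installed"
    import torch

    # torch.cuda.is_available() is True only if a CUDA-capable GPU and driver are found.
    return "cuda" if torch.cuda.is_available() else "cpu"


device = describe_device()
print(f"Notebooks would run on: {device}")
```

If this prints `cpu` on a cloud platform, the GPU accelerator probably needs to be enabled in that platform's notebook settings.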

Running on a cloud platform#

To run these notebooks on a cloud platform, just click on one of the badges in the table below:

| Lecture | Colab | Kaggle | Gradient | Studio Lab |
|---|---|---|---|---|
| 1 - Jet tagging with neural networks | | | | |
| 2 - Gradient descent | | | | |
| 3 - Neural network deep dive | | | | |
| 4 - Jet images and transfer learning with CNNs | | | | |
| 5 - Convolutional neural networks | | | | |
| 6 - Generating hep-ph titles with Transformers | | | | |

Nowadays, the GPUs on Colab tend to be K80s (which have limited memory), so we recommend using Kaggle, Gradient, or SageMaker Studio Lab. These platforms tend to provide more performant GPUs like P100s, all for free!

Note: some cloud platforms like Kaggle require you to restart the notebook after installing new packages.

Running on your machine#

To run the notebooks on your own machine, first clone the repository and navigate to it:

$ git clone https://github.com/nlp-with-transformers/notebooks.git

$ cd notebooks

Next, run the following command to create a conda virtual environment that contains all the libraries needed to run the notebooks:

$ conda env create -f environment.yml

Recommended references#

Deep learning#

Deep Learning for Coders with Fastai and PyTorch by Jeremy Howard and Sylvain Gugger. A highly accessible and practical book that will serve as a guide for these lectures.

Hands-On Machine Learning with Scikit-Learn, Keras, and TensorFlow by Aurélien Géron. An excellent book that covers both machine learning and deep learning.

Particle physics#

The Particle Data Group has a wonderfully concise review on machine learning. You can find it under Mathematical Tools > Machine Learning.

Jet Substructure at the Large Hadron Collider by A. Larkoski et al. (2017). Although ancient by deep learning standards (most papers are outdated the moment they land on the arXiv 🙃), this review covers all the concepts we’ll need when looking at jets and how to tag them with neural networks.

HEPML-LivingReview. A remarkable project that catalogues a large number of papers on machine learning and particle physics in a useful set of categories.

Physics Meets ML. A regular online seminar series that brings together researchers from the machine learning and physics communities.

Machine Learning and the Physical Sciences. A recent workshop at the NeurIPS conference that covers the whole gamut of machine learning and physics (not just particle physics).

Graph Neural Networks in Particle Physics by J. Shlomi et al. (2020). A concise summary of applying graph networks to experimental particle physics, mostly useful if we have time to cover these exciting architectures.